This Thing Called 3D

It has been a heck of a month for 3D announcements. Comcast carried The Final Destination in 3D on the day of its DVD release. The Consumer Electronics Show (CES) seemed all about 3D. The International Telecommunications Union (ITU) issued a report on 3D TV. The program recently posted for next month’s Hollywood Post Alliance (HPA) Tech Retreat includes not only a 3D-in-the-Home “supersession” but also other presentations on such issues as 3D gaming, 3D projection, 3D vision, and, from Adobe, 3D video stabilization. Electronic Engineering Times (EET) ran a story on January 21 about an agreement between France’s CEA-Leti and U.S. firm R3Logic “to develop 3D design methodologies for consumer and wireless applications.” And Computerworld on January 27 talked about 3D video graphics chips moving from games to medical imaging.

What does it all mean? That’s the sort of question one might ask after reading a newspaper’s front page, with perhaps a dozen stories on different topics. It applies here because the 3D discussed in the above paragraph also covers multiple topics, not all of them associated with depth perception.

The EET story about 3D design for consumer applications, for example, was referring to layered circuits that might not have anything to do with images. Adobe’s 3D video stabilization presentation at the HPA Tech Retreat clearly has something to do with video, but, in this case, the three dimensions are height, width, and time. The 3D video-graphics chips in the Computerworld story do deal with the manipulation of objects in three-dimensional space, but the images of those objects are typically presented on flat screens that offer the same view even if one eye is shut.

The remaining stories in the first paragraph do have something to do with presenting some sort of view of our three-dimensional world beyond that offered by typical two-dimensional video imagery, but they cover an extraordinary range of possibilities. Perhaps it’s best to start with a closer look at our world.

We often discuss a human’s five senses, one of which we call feeling, but we can feel shape, texture, hardness, moisture, temperature, pain, hunger, thirst, and dizziness (among other things). Are those all the same sense? How do you feel about that question?

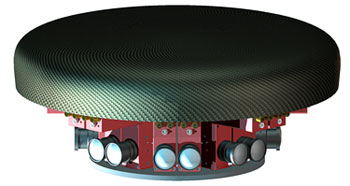

Fraunhofer panoramic stereoscopic camera rig

Setting aside TacTV, ScenTV, PalaTV, and even sound, our visible world still offers far more than any television system has ever demonstrated. We can look around in every direction, including up and down. One of the presentations at the HPA Tech Retreat will be about “immersive-media” research at Germany’s Fraunhofer Institute, where there has been work on displaying imagery on a dome and on capturing stereoscopic images in a semi-circle, but neither of those fully covers our ability to look in any direction.

Perhaps the closest anyone has come is in the latest versions of the University of Illinois CAVE (Cave Automatic Virtual Environment), which project stereoscopic computer-graphic information on three walls and the floor and track the viewer’s visual orientation. Duke University’s DiVE (Duke immersive Virtual Environment) projects on all six faces of a cube and uses both head and hand tracking. For a viewer being tracked, the sensation is remarkable. But these rooms offer only what the computers can deliver, not live, real-world images.

As for homes, there’s that report, issued on January 14 by Study Group 6 of the ITU’s Radiocommunications Sector (the people responsible for the global digital video standard Rec. 601). It provides “a roadmap for future 3D TV implementation.”

According to the report, the most-advanced 3D TV demonstrations at CES 2010 were merely part of “the first generation — ‘plano-stereoscopic television,’” of which more soon. It is to be followed by systems with “multiple views.”

Those aren’t systems with more camera positions or even a greater field of view, as in Fraunhofer’s panoramic system. Instead, multi-view 3D systems offer more than just the two views of two eyes, allowing a viewer to actually move a little and see around objects.

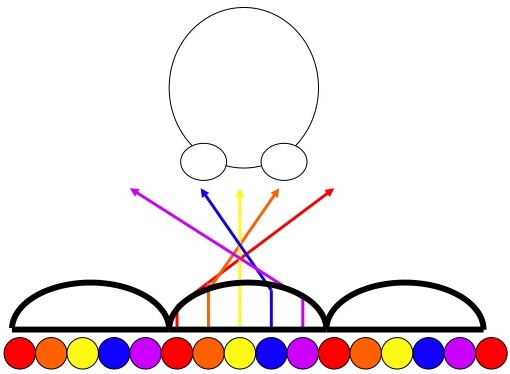

In his paper on no-glasses 3D (NG3D) presented at the most-recent SMPTE convention, Fox’s Thomas Edwards showed ways to do this. Above, shown with his permission, is an illustration of a lenticular (lens-based) version. The lenses and associated views are not to scale (they would be much smaller and there would probably be more views). As the viewer moves left and right, different views become available, as they do in the real world.
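
To make the lenticular idea more concrete, here is a minimal sketch of how such a panel image might be assembled. It assumes idealized vertical lenticules that each cover exactly n pixel columns. Real panels often use slanted lenses and subpixel mapping, and this is not Edwards’s method. The function names are hypothetical. Under lenticule k, panel column k·n + j carries column k of view j, so the lens sends each of the n views toward a different viewing angle.

```python
from typing import List, Sequence

Image = List[List[int]]  # rows of grayscale pixel values


def interleave_views(views: Sequence[Image]) -> Image:
    """Build a lenticular panel image from n equally sized views.

    Assumes idealized vertical lenticules, each covering exactly n panel
    columns: under lenticule k, panel column k*n + j shows column k of view j.
    """
    n = len(views)
    if n == 0:
        raise ValueError("need at least one view")
    height, width = len(views[0]), len(views[0][0])
    for v in views:
        if len(v) != height or any(len(row) != width for row in v):
            raise ValueError("all views must have the same size")
    panel: Image = []
    for r in range(height):
        row: List[int] = []
        for k in range(width):   # one lenticule per column of each view
            for j in range(n):   # n panel columns under each lenticule
                row.append(views[j][r][k])
        panel.append(row)
    return panel


def view_under_column(panel_column: int, n_views: int) -> int:
    """Which view a given panel column carries in this idealized layout."""
    return panel_column % n_views


# Three one-row, two-pixel views, each filled with its own view number.
views = [[[0, 0]], [[1, 1]], [[2, 2]]]
print(interleave_views(views))  # [[0, 1, 2, 0, 1, 2]]
```

In this layout, each view keeps only 1/n of the panel’s horizontal resolution. That is the price paid for letting the viewer move and see different views.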

At the 2009 NAB show, NHK, the Japan Broadcasting Corporation, demonstrated a version of multi-view 3D they called “integral television,” wherein a multi-lens plate allows a camera to capture many views of the same scene, and a similar display system allows them to be seen. In these screen shots from the NHK system, it’s clear that, as the still camera shooting the integral display moves lower, it sees things differently from its upper position. It’s also clear that the image quality is not yet up to today’s HDTV, and that’s despite the fact that the images were shot by an ultra-high-definition (“8K”) camera with more than 33 million pixels per color.
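
As a quick check on the pixel count, assume the 7680 × 4320 raster that NHK uses for its Super Hi-Vision (“8K”) work:

$$7680 \times 4320 = 33\,177\,600 \approx 33.2 \times 10^{6}, \qquad \frac{33\,177\,600}{1920 \times 1080} = \frac{33\,177\,600}{2\,073\,600} = 16$$

That is sixteen times the pixels of a 1080-line HDTV frame, and the integral display still fell short of HDTV quality.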

Even farther from today’s image quality was a tiny display across the aisle at NAB 2009, in the exhibit of Japan’s National Institute of Information and Communications Technology (NICT). Despite the small size of the image, it took a large room of equipment to produce it. It was a live, motion, color hologram.

NICT isn’t the only organization to have shown live holography, and some might argue that others look a little better. But there’s no question live holography still needs a lot of work before it is of home-entertainment quality.

Live holography is the third-generation system described in the ITU report, one that can “record the amplitude, frequency, and phase of light waves, to reproduce almost completely human beings’ natural viewing environment. These kinds of highly advanced systems are technically some 15-20 years away.”

NICT’s live hologram at NAB 2009

It might be worth noting that, as electronic image capture and display move towards holographic capability, traditional film-based holography is moving farther into the full-color, moving-image field. At the 2009 HPA Tech Retreat, RabbitHoles Media demonstrated white-light-illuminated, no-electronics holograms with as many as 1280 full-color, full-motion frames built in. They may be viewed in the galleries at the RabbitHoles site (one of my favorites is “Batman Dark Knight #1,” made for Six Flags): http://www.rabbitholes.com/entertainment-gallery/

If live, color, motion holography is 3D TV at its imagined future finest, what about 3D TV today? Reports associated with CES sometimes told of high percentages or numbers of 3D-compatible TVs being sold in the near future. But what does that mean? Comcast’s offering of The Final Destination in 3D was compatible with all TVs — sort of.

It was carried as “anaglyph” 3D. The word, for which English-language references can be found as early as 1651, literally means a three-dimensional carving. That it is now applied to colored 3D glasses indicates just how long they have been used to deliver appropriate stereoscopic views to appropriate eyes — except for certain problems (not counting the fact that they won’t work with black-&-white TV sets).
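
The sketch below shows how a simple red-cyan anaglyph frame can be built from two eye views. It assumes the common convention of a red filter over the left eye and a cyan filter over the right eye, and the function name is hypothetical. The red channel is taken from the left-eye view, and the green and blue channels (which together make cyan) are taken from the right-eye view.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each 0-255
Image = List[List[Pixel]]


def red_cyan_anaglyph(left: Image, right: Image) -> Image:
    """Combine two eye views into one color picture.

    Red channel comes from the left-eye view; green and blue (together,
    cyan) come from the right-eye view. Assumes a red filter over the
    left eye and a cyan filter over the right.
    """
    if len(left) != len(right) or any(len(a) != len(b) for a, b in zip(left, right)):
        raise ValueError("views must be the same size")
    return [
        [(lp[0], rp[1], rp[2]) for lp, rp in zip(lrow, rrow)]
        for lrow, rrow in zip(left, right)
    ]


left_view = [[(200, 10, 20)]]
right_view = [[(5, 100, 150)]]
print(red_cyan_anaglyph(left_view, right_view))  # [[(200, 100, 150)]]
```

The result is an ordinary RGB picture, so it can pass through any color-TV chain. A black-&-white set, however, displays only brightness and has no way to route the two views to different eyes.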

The traditional anaglyph color pair is red and cyan. In research performed at Curtin University of Technology in 2004 on 29 completely different pairs of such glasses, all but one pair (which had been made using an ink-jet printer on a transparency sheet) showed superb transmission of the colors they were supposed to pass and equally superb rejection of the colors they were supposed to block. Unfortunately, the screens viewed through the glasses, whether TV, computer, or projection, weren’t nearly as good. The wrong images got through, a condition known as ghosting (http://cmst.curtin.edu.au/publicat/2004-08.pdf).
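
Ghosting can be pictured with a simple linear crosstalk model. This is an assumption for illustration: real crosstalk depends on the display’s color spectra and the filters. In the model, a fixed fraction of the other eye’s image leaks through to each eye. The function name and the 10% leakage figure are hypothetical.

```python
from typing import Tuple


def eye_signals(left: float, right: float,
                leak_into_left: float, leak_into_right: float) -> Tuple[float, float]:
    """Light reaching each eye under a simple linear crosstalk model.

    left/right: intended brightness of each eye's view at one point (0-1).
    leak_into_*: fraction of the other eye's view that gets through
    (hypothetical values for illustration).
    """
    for leak in (leak_into_left, leak_into_right):
        if not 0.0 <= leak <= 1.0:
            raise ValueError("leakage must be between 0 and 1")
    seen_left = left + leak_into_left * right
    seen_right = right + leak_into_right * left
    return seen_left, seen_right


# A bright object present only in the right-eye view, with 10% leakage:
print(eye_signals(0.0, 1.0, 0.10, 0.0))  # (0.1, 1.0)
```

Even if the glasses themselves are perfect, any nonzero leak term from the screen leaves a faint copy of the right-eye object visible to the left eye.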

Other anaglyph problems include differing levels of light through the two lenses (which can cause a condition called “eye rivalry”), poor color rendition, and even a difference in the eye’s focusing for red versus blue. The last issue has been addressed by some glasses with magnification applied to one lens, and different color combinations and strengths have been tried to deal with the others. A green and magenta pair reduced eye rivalry, and a dark-amber and dark-blue pair, amazingly, seems to improve color rendition after the eyes have adapted to the dim images.
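
One rough way to see why a different color pair can ease eye rivalry is to estimate how much of a white image’s brightness each lens passes. The sketch assumes idealized filters that pass whole RGB primaries and weights them with the Rec. 601 luma coefficients. Real filters and displays overlap spectrally, so the numbers are only indicative. The function names are hypothetical.

```python
# Rec. 601 luma weights for the R, G, and B primaries
LUMA_601 = {"R": 0.299, "G": 0.587, "B": 0.114}


def filter_luma(passed: str) -> float:
    """Relative brightness of a white image seen through an idealized filter
    that passes exactly the listed primaries (e.g. "GB" for cyan)."""
    return sum(LUMA_601[p] for p in passed)


def brightness_ratio(filter_a: str, filter_b: str) -> float:
    """Brighter eye divided by dimmer eye (1.0 = perfectly balanced)."""
    a, b = filter_luma(filter_a), filter_luma(filter_b)
    return max(a, b) / min(a, b)


for name, pair in {"red/cyan": ("R", "GB"), "green/magenta": ("G", "RB")}.items():
    print(f"{name}: {brightness_ratio(*pair):.2f}")
# red/cyan: 2.34
# green/magenta: 1.42
```

Under these assumptions, the cyan lens passes well over twice the brightness of the red one. A green/magenta pair splits the light much more evenly between the two eyes.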

The attraction of anaglyph 3D is that it can be used (with varying degrees of success) in any system that can deal with color TV, which includes almost all TV sets and the signals that feed them. It’s also possible to reduce the depth of the 3D sensation to create images that may be considered compatible with ordinary, glasses-free 2D viewing.

In the 1970s, for example, Digital Optical Technology Systems used a horizontal-slit iris. The center of the slit was clear, the left had one color filter, and the right had another. Anything in focus would pass through the clear center. Anything out of focus because it was too close would pick up one set of color fringes; anything out of focus because it was too far would pick up the opposite set. Viewers with glasses got a 3D effect; viewers without them saw minor color fringes, but only on out-of-focus parts of the image.
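
The geometry behind those fringes can be sketched with the thin-lens equation. This sketch assumes an ideal thin lens, not the actual DOTS optics, and the function names are hypothetical. Light from a point that passes through an off-center part of the aperture lands displaced sideways at the sensor. The displacement is proportional to the aperture position and is zero when the point is in focus. Its sign flips between points nearer and farther than the focus distance, so a filter on one side of the slit colors one side of near objects and the opposite side of far ones.

```python
def image_distance(focal_mm: float, object_mm: float) -> float:
    """Thin-lens equation 1/f = 1/s + 1/v, solved for v."""
    if object_mm <= focal_mm:
        raise ValueError("object must be beyond the focal length")
    return 1.0 / (1.0 / focal_mm - 1.0 / object_mm)


def fringe_offset_mm(aperture_x_mm: float, focal_mm: float,
                     focus_mm: float, object_mm: float) -> float:
    """Sideways displacement at the sensor of light from an on-axis point
    that passes through the aperture at horizontal position aperture_x_mm.

    The ray heads for the point's sharp image at v_object but is
    intercepted by the sensor at v_sensor.
    """
    v_sensor = image_distance(focal_mm, focus_mm)
    v_object = image_distance(focal_mm, object_mm)
    return aperture_x_mm * (1.0 - v_sensor / v_object)


# 50 mm lens focused at 2 m; left-filter region 5 mm left of center.
near = fringe_offset_mm(-5.0, 50.0, 2000.0, 1000.0)
far = fringe_offset_mm(-5.0, 50.0, 2000.0, 4000.0)
print(near < 0 < far)  # True: the fringe flips side with depth
```

The clear center of the slit (aperture position near zero) contributes little displacement at any depth. That is why in-focus detail stays clean.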

Colored glasses haven’t been the only way of delivering 3D to ordinary TV sets. In 1953, when Business Week ran the headline “3-D Invades TV,” stereoscopic broadcasts carried the two eyes’ images side by side. Viewers wore prismatic glasses to direct their gaze appropriately (and, in one version, hung a filter plate over the screen, polarized in one direction on the left half and in the other on the right). Above at the left are the 1953 Stereographics glasses and at the right a 2009 Prisma-Chrome pair.

Such side-by-side 3D is compatible with all TV sets, even black-&-white ones, though it does create vertically oriented images. So other compatible 3D systems have tried splitting the screen horizontally instead of vertically, for wide, over-under images. As in side-by-side systems, prismatic glasses were initially used, but, to get away from the problem of a single “sweet-spot” distance for viewing, LeaVision tried adjustable-mirror glasses. Shown below are KMQ prismatic viewers for over-under 3D.
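
The aspect-ratio arithmetic behind “vertically oriented” and “wide” is simple. This sketch assumes a 4:3 screen, the standard TV shape of the era, with the two views placed without any anamorphic squeeze. The function name is hypothetical.

```python
from fractions import Fraction


def half_frame_aspect(screen_w: int, screen_h: int, layout: str) -> Fraction:
    """Aspect ratio (width/height) of each eye's image when one frame is
    split in two without anamorphic squeezing."""
    w, h = Fraction(screen_w), Fraction(screen_h)
    if layout == "side-by-side":
        return (w / 2) / h
    if layout == "over-under":
        return w / (h / 2)
    raise ValueError(f"unknown layout: {layout}")


print(half_frame_aspect(4, 3, "side-by-side"))  # 2/3: taller than wide
print(half_frame_aspect(4, 3, "over-under"))    # 8/3: much wider than tall
```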

Don’t like colored fringes or double images on a TV screen? There are still multiple techniques for getting 3D onto existing TV sets.

Active-shutter adapter & glasses available today

Some of the latest schemes at CES involve active-shutter glasses. These have what are, in effect, single-pixel LCD screens in front of each eye. In one state, the LCD is clear, and the eye sees the TV picture; in the other, the LCD is opaque, and the eye sees nothing. If alternate-eye views are fed to the TV sequentially, and the glasses synchronously prevent the wrong eye from seeing what it shouldn’t, the result is stereoscopic viewing. If the rate of change is fast enough, viewers don’t notice flickering.
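
Here is a minimal sketch of the frame-sequential timing described above. The function names and the 120 Hz display rate are illustrative assumptions, not any particular product’s specification. Alternate frames carry the left and right views, each shutter opens only for its own eye’s frames, and so each eye is refreshed at half the display rate. That halving is why the alternation must be fast to avoid visible flicker.

```python
from typing import List, Tuple


def shutter_schedule(n_frames: int, first_eye: str = "L") -> List[Tuple[str, str, str]]:
    """For each displayed frame, return (view shown, left shutter, right shutter)."""
    if first_eye not in ("L", "R"):
        raise ValueError("first_eye must be 'L' or 'R'")
    other = "R" if first_eye == "L" else "L"
    schedule = []
    for i in range(n_frames):
        view = first_eye if i % 2 == 0 else other
        left = "open" if view == "L" else "closed"
        right = "open" if view == "R" else "closed"
        schedule.append((view, left, right))
    return schedule


def per_eye_rate_hz(display_rate_hz: float) -> float:
    """Each eye sees every other frame, so it gets half the display rate."""
    return display_rate_hz / 2


for frame in shutter_schedule(4):
    print(frame)
print(per_eye_rate_hz(120))  # 60.0
```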

The new 3D TVs offer direct means for synchronizing the glasses to the images. But before they were even introduced, boxes were on the market (primarily for games) for controlling the glasses when ordinary (not specifically 3D) displays were used. So even active-shutter, alternating-view 3D can be compatible with existing TVs.

Hobbyists with more technical savvy can even modify their TVs to be able to use passive polarized glasses, but that’s beyond the realm of most viewers. So TVs using micro-polarized screens for linearly polarized glasses or switching plates to reverse the direction of circularly polarized images are (for the moment, at least) specialty products.

Then there’s so-called “wiggle-vision,” “wobble stereoscopy” or “flicker vision.” It comes in many forms. In the most basic, the two eye views are simply alternated (as in the active-glasses systems but at a much slower rate). Both eyes see both views and use the mechanism of parallax to provide depth cues.

Unfortunately, both eyes also see a wiggling, wobbling, or flickering image. Vision III Imaging offers lenses and adaptors that provide a much more subtle version of the effect to achieve what they call “Depth Enhanced Video.” It has been used on major broadcasts. You can judge the effect for yourself at this site: http://www.inv3.com/index.html

There are even more TV-compatible 3D systems using inexpensive glasses — or even no glasses at all — and they are compatible with ordinary 2D viewing. But they’re not truly stereoscopic.

One class uses the Pulfrich effect, named for the professor who wrote it up after it was brought to his attention. Despite being an acknowledged 3D expert, Carl Pulfrich was blind in one eye and couldn’t experience the effect himself.

In Pulfrich 3D, one eye is darkened. According to one explanation, the darkened eye is forced towards rod-based (scotopic) vision, which relies on a slower chemical process than cone-based (photopic) vision. The undarkened eye sees what is; the darkened eye sees what just was. If the motion in the image is appropriately controlled (say, the foreground always moving left to right and the background right to left), viewers with one eye darkened get a 3D effect.
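
The “sees what just was” explanation can be turned into a rough geometric estimate. Assume the darkened eye’s signal is delayed by a fixed time Δt. The 15 ms below is purely illustrative, since the real delay varies with filter density and viewer. An object moving sideways at velocity v is then seen by the two eyes at positions v·Δt apart, which the brain treats as a screen disparity. Ordinary viewing geometry converts that disparity into an apparent distance; the 65 mm eye separation and 2 m viewing distance are also assumptions. The function names are hypothetical.

```python
def pulfrich_disparity_mm(velocity_mm_s: float, delay_s: float,
                          dark_eye: str = "right") -> float:
    """Horizontal disparity (right-eye position minus left-eye position)
    created because the darkened eye sees where the object was delay_s ago.

    Positive = uncrossed (behind the screen), negative = crossed (in front).
    velocity_mm_s is positive for left-to-right motion on the screen.
    """
    lag_mm = velocity_mm_s * delay_s
    if dark_eye == "right":
        return -lag_mm   # right eye sees an older, more leftward position
    if dark_eye == "left":
        return lag_mm    # left eye sees an older, more leftward position
    raise ValueError("dark_eye must be 'left' or 'right'")


def perceived_distance_mm(disparity_mm: float, viewing_distance_mm: float,
                          eye_separation_mm: float = 65.0) -> float:
    """Distance at which the two eyes' lines of sight cross."""
    if disparity_mm >= eye_separation_mm:
        raise ValueError("disparity must be smaller than the eye separation")
    return viewing_distance_mm * eye_separation_mm / (eye_separation_mm - disparity_mm)


screen_mm = 2000.0
for v in (200.0, -200.0):  # left-to-right, then right-to-left, in mm/s
    d = pulfrich_disparity_mm(v, 0.015)
    print(v, round(perceived_distance_mm(d, screen_mm)))
```

With the right eye darkened, left-to-right motion appears in front of the screen and right-to-left motion behind it. That is consistent with the foreground/background choreography described above. Objects that stop moving fall back to the screen plane.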

Pulfrich 3D has successfully broadcast such major events as the Tournament of Roses Parade and The Rolling Stones Steel Wheels Tour. But the choreography is critical. A spinning carousel is perfect Pulfrich material; a sports event in which players might move in any direction is not.

Then there’s the chromostereoscopic effect. Our eyes have simple lenses, and simple lenses cannot focus on different colors at the same time. The muscles focusing our lenses send feedback to the brain, so red can seem to be in front of blue.

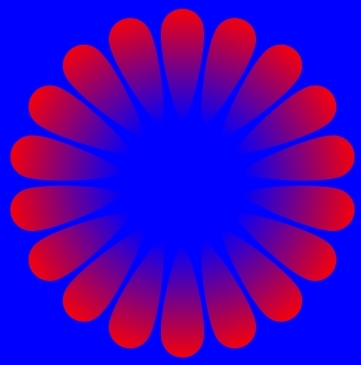

Above is a portion of a picture called “Apparatus to Talk to Aliens,” copyright 2005 by Professor Akiyoshi Kitaoka of the Department of Psychology of Ritsumeikan University in Kyoto, Japan. It was created to demonstrate chromostereopsis and is used here with permission. If you have a sensation that the red “petals” are funneling inwards, you are experiencing the chromostereoscopic effect. You may find the complete picture (and other examples) at this site: http://www.psy.ritsumei.ac.jp/~akitaoka/scolor2e.html

Chromostereopsis is not a very strong effect, but it can be enhanced with color-shifting glasses. American Paper Optics (source of the anaglyph and Pulfrich glasses shown earlier) sells ChromaDepth glasses to do just that. They’ve been used for VH1’s I Love the 80s in 3D. There’s more information here: http://www.3dglassesonline.com/3d-chromadepth-glasses/

As with the Pulfrich effect, attention must be paid to the choreography of the images. Reddish colors must be kept in the foreground and bluish ones in the background, though that arrangement is common in many outdoor scenes. In fact, at the New Technology Campus at the 2009 International Broadcasting Convention, Canada’s Communications Research Centre demonstrated a 2D-to-3D real-time conversion system based on color alone.
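
To illustrate the general idea of color-driven 2D-to-3D conversion, the sketch below assigns each pixel a hypothetical “nearness” from its red-versus-blue balance. It then shifts pixels sideways to synthesize a second eye’s view, a simple form of depth-image-based rendering. This is not the CRC system, whose method isn’t described here, and both the heuristic and the function names are hypothetical. One image row is processed at a time.

```python
from typing import List, Optional, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each 0-255


def nearness_from_color(p: Pixel) -> float:
    """Hypothetical depth cue: 1.0 for pure red (nearest), 0.0 for pure blue
    (farthest), following the 'reddish near, bluish far' rule."""
    r, _, b = p
    return (r - b + 255) / 510


def synthesize_second_view(row: List[Pixel], max_shift: int) -> List[Pixel]:
    """Shift each pixel left by up to max_shift pixels in proportion to its
    nearness, giving near pixels crossed disparity in the new (right-eye)
    view. Where two pixels land on the same spot the nearer one wins; gaps
    are filled by repeating the pixel to their left."""
    width = len(row)
    out: List[Optional[Pixel]] = [None] * width
    depth_at = [-1.0] * width
    for x, p in enumerate(row):
        d = nearness_from_color(p)
        nx = x - round(d * max_shift)
        if 0 <= nx < width and d > depth_at[nx]:
            out[nx], depth_at[nx] = p, d
    filled: List[Pixel] = []
    for x in range(width):
        px = out[x]
        if px is None:
            px = filled[x - 1] if x > 0 else row[x]
        filled.append(px)
    return filled


RED, BLUE = (255, 0, 0), (0, 0, 255)
row = [BLUE, BLUE, BLUE, RED, BLUE, BLUE, BLUE]
print(synthesize_second_view(row, max_shift=2).index(RED))  # 1
```

The original row serves as the left-eye view. The red pixel moves two places left in the synthesized right-eye view, so it appears in front of the blue “background,” which stays at the screen plane.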

Even ignoring chromostereoscopy and the Pulfrich effect, however, there are clearly many ways to deliver 3D to existing TV sets. So, when it is reported that some portion of TV sets will be 3D-compatible in the future, why isn’t it 100%?