The Blind Leading

Once upon a time, people were prevented from getting married, in some jurisdictions, based on the shade of their skin colors. Once upon a time, a higher-definition image required more pixels on the image sensor and higher-quality optics.

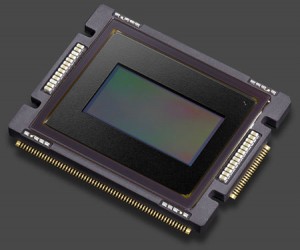

Actually, we still seem to be living in the era indicated by the second sentence above. At the 2012 Hollywood Post Alliance (HPA) Tech Retreat, to be held February 14-17 (with a pre-retreat seminar on “The Physics of Image Displays” on the 13th) at the Hyatt Grand Champions in Indian Wells, California <http://bit.ly/slPf9v>, one of the earliest panels in the main program will be about 4K cameras, and representatives from ARRI, Canon, JVC, Red, Sony, and Vision Research will all talk about cameras with far more pixel sites on their image sensors than there are in typical HDTV cameras; Sony’s, shown at the left, has roughly ten times as many.

That’s by no means the limit. The prototypical ultra-high-definition television (UHDTV) camera shown at the right has three image sensors (from Forza Silicon), each one of which has about 65% more pixel sites than on Sony’s sensor. There is so much information being gathered that each sensor chip requires a 720-pin connection (and Sony’s image sensor is intended for use in just a single-sensor camera, so there are actually about five times more pixel sites). But even that isn’t the limit! As I pointed out last year, Canon has already demonstrated a huge hyper-definition image sensor, with four times the number of pixels of even those Forza image sensors used in the camera at the right <http://www.schubincafe.com/2010/09/07/whats-next/>!

Having entered the video business at a time when picture editing was done with razor blades, iron-filing solutions to make tape tracks visible, and microscopes, and when video projectors utilized oil reservoirs and vacuum pumps, I’ve always had a fondness for the physical characteristics of equipment. Sensors will continue to increase in resolution, and I love that work. At the same time, I recognize some of the problems of an inexorable path towards higher definition.

The standard-definition camera that your computer or smart phone uses for video conferencing might have an image sensor with a resolution characterized as 640×480 or 0.3 Mpel (megapixels), even if that same smart phone has a much-higher-resolution image sensor pointing the other way for still pictures. That’s because video must make use of continually changing information. At 60 frames per second, that 0.3 Mpel camera delivers more pixels in one second than an 18 Mpel sensor shooting a still image.

Common 1080-line HDTV has about 2 Mpels. So-called “4K” has about 8 Mpels. It’s already tough to get a great HDTV lens; how will we deal with UHDTV’s 33-Mpel “8K”?

A frame rate of 60-fps delivers twice as much information as 30-fps; 120-fps is twice as much as 60-fps. How will we ever manage to process high-frame-rate UHDTV?
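
To put rough numbers on those questions, here is a minimal sketch of the arithmetic. The frame dimensions are assumed common values (for instance, "4K" is taken as 3840×2160, although some cameras use other widths), and `FORMATS` and `pixel_rate` are names made up for this illustration. It counts pixel sites only and ignores bit depth, color sampling, and compression.

```python
# Approximate pixels per frame. The dimensions are assumed common values,
# not figures quoted for any particular camera.
FORMATS = {
    "VGA webcam (640x480)": 640 * 480,
    "1080-line HDTV (1920x1080)": 1920 * 1080,
    "4K (3840x2160)": 3840 * 2160,
    "8K UHDTV (7680x4320)": 7680 * 4320,
}


def pixel_rate(pixels_per_frame: int, fps: int) -> int:
    """Pixels delivered per second at a given frame rate."""
    return pixels_per_frame * fps


if __name__ == "__main__":
    for name, pixels in FORMATS.items():
        rates = ", ".join(
            f"{fps} fps: {pixel_rate(pixels, fps) / 1e6:,.0f} Mpel/s"
            for fps in (30, 60, 120)
        )
        print(f"{name}: {pixels / 1e6:.1f} Mpel per frame; {rates}")
```

Under those assumptions, the 0.3-Mpel webcam at 60 fps already moves a little over 18 Mpel every second, and each doubling of frame rate doubles whatever the format's per-frame count implies.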

Perhaps it’s worth consulting the academies. In U.S. entertainment media, the highest awards are granted by the Academy of Motion Picture Arts & Sciences (the Academy Award or Oscar), the Academies (there are two) of Television Arts & Sciences (the Emmy Award), and the Recording Academy (the Grammy Award). Win all three, and you are entitled to go on an EGO (Emmy-Grammy-Oscar) trip!

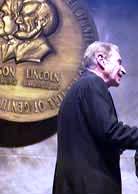

In the history of those awards, only 33 people have ever achieved an EGO trip. And only two of those also won awards from the Audio Engineering Society (AES), the Institute of Electrical and Electronics Engineers (IEEE), and the Society of Motion Picture and Television Engineers (SMPTE). You’re probably familiar with the last name of at least one of those two, Ray Dolby, shown at left during his induction into the National Inventors Hall of Fame in 2004.

The other was Thomas Stockham. Some in the audio community might recognize his name. He was at one time president of the AES, is credited with creating the first digital-audio recording company (Soundstream), and was one of the investigators of the 18½-minute gap in then-President Richard Nixon’s White House tapes regarding the Watergate break-in.

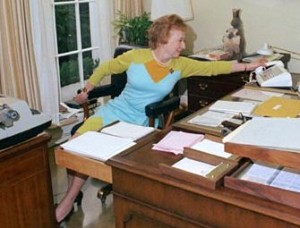

Those achievements appeal to my sense of appreciation of physical characteristics. The Soundstream recorder (right) was large and had many moving parts. And the famous “stretch” of Nixon’s secretary Rose Mary Woods (left), which would have been required to accidentally cause the gap in the recording, is a posture worthy of an advanced yogi (Stockham’s investigative group, unfortunately for that theory, found that there were multiple separate instances of erasure, which could not have been caused by any stretch). But what impressed (and still impresses) me most about Stockham’s work has no physical characteristics at all. It’s pure mathematics.

On the last day of the HPA Tech Retreat, as on the first day, there will be a presentation on high-resolution imaging. But it will have a very different point of view. Siegfried Foessel of Germany’s Fraunhofer research institute will describe “Increasing Resolution by Covering the Image Sensor.” The idea is that, instead of using a higher-resolution sensor, which increases data-readout rates, it’s actually possible to use a much-lower-resolution image sensor, with the pixel sites covered in a strange pattern (a portion of which is shown at the right). Mathematical processing then yields a much-higher-resolution image — without increasing the information rate leaving the sensor.

In the HPA Tech Retreat demo room, there should be multiple demonstrations of the power of mathematical processing. Cube Vision and Image Essence, for example, are expected to be demonstrating ways of increasing apparent sharpness without even needing to place a mask over the sensor. Lightcraft Technology will show photorealistic scenes that never even existed except in a computer. And those are said to have gigapixel (thousand-megapixel) resolutions!

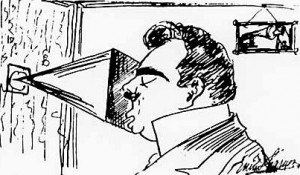

All of that mathematical processing, to the best of my knowledge, had no direct link to Stockham, but he did a lot of mathematical processing, too. In the realm of audio, his most famous effort was probably the removal of the recording artifacts of the acoustical horn into which the famous opera tenor Enrico Caruso sang in the era before microphone-based recording (shown at left in a drawing by the singer, himself).

As Caruso sang, the sound of his voice was convolved with the characteristics of the acoustic horn that funneled the sound to the recording mechanism. Recovering the original sound for the 1976 commercial release Caruso: A Legendary Performer required deconvolving the horn’s acoustic characteristics from the singer’s voice. That’s tough enough even if you know everything there is to know about the horn. But Stockham didn’t, so he had to use “blind” deconvolution. It wasn’t the first time.

He was co-author of an invited paper that appeared in the Proceedings of the IEEE in August 1968. It was called “Nonlinear Filtering of Multiplied and Convolved Signals,” and, while some of it applied to audio signals, other parts applied to images. He followed up with a solo paper, “Image Processing in the Context of a Visual Model,” in the same journal in July 1972. Both papers have been cited many hundreds of times in more-recent image-processing work.

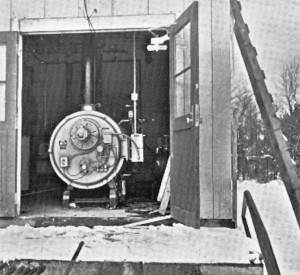

One image in both papers showed the outside of a building, shot on a bright day; the door was open, but the inside was little more than a black hole (a portion of the image is shown above left, including artifacts of scanning the print article with its half-tone images). After processing, all of the details of the equipment inside could readily be seen (a portion of the image is shown at right, again including scanning artifacts). Other images showed effective deblurring, and the blur could be caused by either lens defocus or camera instability.
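
The kind of processing that opens up a "black hole" like that can be sketched in a few lines. What follows assumes a homomorphic-style filter, the approach generally associated with the 1968 paper; it is not a reconstruction of Stockham's actual processing. It treats brightness as illumination multiplied by scene detail, takes logarithms so the product becomes a sum, shrinks the slowly varying part, boosts the rest, and exponentiates. The function names, the moving-average low-pass filter, the gains, and the one-dimensional "scanline" are all hypothetical choices made for illustration.

```python
import math


def moving_average(values, radius):
    """Crude low-pass filter: mean over a window, truncated at the ends."""
    n = len(values)
    out = []
    for i in range(n):
        lo, hi = max(0, i - radius), min(n, i + radius + 1)
        out.append(sum(values[lo:hi]) / (hi - lo))
    return out


def compress_contrast(scanline, radius=4, illum_gain=0.4, detail_gain=1.5):
    """Homomorphic-style filter on one scanline of positive intensities."""
    logs = [math.log(v) for v in scanline]
    illum = moving_average(logs, radius)             # slowly varying lighting
    detail = [l - s for l, s in zip(logs, illum)]    # fast-varying texture
    return [math.exp(illum_gain * s + detail_gain * d)
            for s, d in zip(illum, detail)]


if __name__ == "__main__":
    # Hypothetical scanline: a dim interior (values 1 and 2) next to a
    # sunlit exterior with the same texture, 100 times brighter.
    texture = [1.0, 2.0] * 8
    scanline = texture + [100.0 * t for t in texture]
    result = compress_contrast(scanline)
    # Compare samples well away from the light/dark boundary.
    print("lighting step before:", scanline[26] / scanline[4])
    print("lighting step after: ", result[26] / result[4])
    print("interior texture contrast before:", scanline[5] / scanline[4])
    print("interior texture contrast after: ", result[5] / result[4])
```

Away from the boundary, the step between shade and sunlight shrinks while the texture inside the shade is stretched. Right at the boundary, this crude low-pass filter overshoots and produces halos, one reason a toy like this falls short of entertainment quality.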

Stockham later (in 1975) actually designed a real-time video contrast compressor that could achieve similar effects. I got to try it. I aimed a bright light up at some shelves so that each shelf cast a shadow on what it was supporting. Without the contrast compressor, virtually nothing on the shelves could be seen; with it, fine detail was visible. But the pictures were not really of entertainment quality.

That was, however, in 1975, and technology has marched — or sprinted — ahead since then. The Fraunhofer Institut presentation at the 2012 HPA Tech Retreat will show how math can increase image-sensor resolution. But what about the lens?

A lens convolves an image in the same way that an old recording horn convolved the sound of an acoustic gramophone recording. And, if the defects of one can be removed by blind deconvolution, so might those of the other. An added benefit is that the deconvolution need not be blind; the characteristics of the lens can be identified. Today’s simple chromatic-aberration corrections could extend to all of a lens’s aberrations, and even its focus and mount stability.
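
When the blur is known, the idea can be sketched very simply. The example below blurs a one-dimensional row of pixels with a known kernel and then undoes most of the damage with a regularized (Wiener-style) inverse filter. That filter is one common choice, not something any camera maker is known to use, and the kernel values, the `noise_floor` setting, and the function names are all hypothetical. A real lens blur is two-dimensional, varies across the frame, and arrives with noise.

```python
import cmath


def dft(x, inverse=False):
    """Naive O(n^2) discrete Fourier transform; fine for short signals."""
    n = len(x)
    sign = 1 if inverse else -1
    out = []
    for k in range(n):
        total = sum(x[t] * cmath.exp(sign * 2j * cmath.pi * k * t / n)
                    for t in range(n))
        out.append(total / n if inverse else total)
    return out


def circular_convolve(signal, kernel):
    """Blur model: multiplying spectra is circular convolution."""
    S, K = dft(signal), dft(kernel)
    return [v.real for v in dft([s * k for s, k in zip(S, K)], inverse=True)]


def deconvolve(blurred, kernel, noise_floor=1e-3):
    """Regularized inverse filter for a known kernel.

    The noise_floor term keeps frequencies the kernel nearly wiped out
    from being amplified without limit.
    """
    B, K = dft(blurred), dft(kernel)
    restored = [b * k.conjugate() / (abs(k) ** 2 + noise_floor)
                for b, k in zip(B, K)]
    return [v.real for v in dft(restored, inverse=True)]


if __name__ == "__main__":
    n = 32
    sharp = [1.0 if 12 <= i < 20 else 0.0 for i in range(n)]  # a bright bar
    psf = [0.0] * n
    psf[0], psf[1], psf[-1] = 0.5, 0.25, 0.25  # hypothetical lens blur
    blurred = circular_convolve(sharp, psf)
    restored = deconvolve(blurred, psf)
    print("worst error, blurred: ",
          max(abs(a - b) for a, b in zip(sharp, blurred)))
    print("worst error, restored:",
          max(abs(a - b) for a, b in zip(sharp, restored)))
```

The restoration is not perfect: frequencies that the blur removed almost entirely cannot be recovered, only kept from blowing up, which is why the known-kernel case is easier than the blind one but still not free.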

Is it merely a dream? Perhaps. But, at one time, so was the repeal of so-called anti-miscegenation laws.