The Light Fantastic

Here are some questions: Why is the man in the picture above holding radioactive sheets of music? What is the strange apparatus behind him? What does it have to do with Emmy awards given to Mitsubishi and Shuji Nakamura this January? And what is the relationship of all of that to the phrase “trip the light fantastic”?

Next month the International Broadcasting Convention (IBC) in Amsterdam will reveal media-technology innovations that haven’t yet appeared in the seemingly endless cycle of trade shows. At last year’s IBC, for example, Sony introduced the HDC-2500 three-CCD camera. It is perhaps four times as sensitive as its basic predecessor, the HDC-1500, while keeping the same standard 2/3-inch image format.

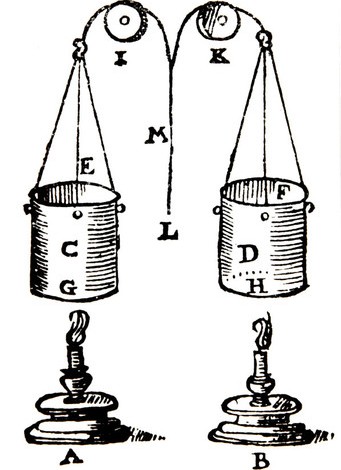

Other cameras have other image formats. Some popular models use CMOS (instead of CCD) image sensors with sizes approximately the same as those of a 35-mm movie-film frame. Some are even larger than that. The larger sizes and different technologies can mean increased sensitivity. Increased sensitivity, in turn, can mean less light needed for proper exposure. But that doesn’t necessarily mean fewer lighting instruments. As shown in the diagram at right, from Bill Fletcher’s “Bill’s Light” NASA web page (http://grcitc.grc.nasa.gov/how/video/video.cfm), a typical simple video lighting setup uses three light sources, and increasing camera sensitivity won’t drop it to two or one.

Perhaps it’s best to start at the beginning, and lighting is the beginning of the electronic image-acquisition process. Whether you prefer a biblical or big-bang origin, in the beginning there was light. The light came from stars (of which our sun is one), and it was bright, as depicted in the image at left, by Lykaestria (http://en.wikipedia.org/wiki/File:The_sun1.jpg). But, in the beginning of video, it wasn’t bright enough. The first person to achieve a recognizable image of a human face (John Logie Baird) and the first person to achieve an all-electronic video image (Philo Taylor Farnsworth) both had to use artificial lighting because their cameras weren’t sensitive enough to pick up images even in direct sunlight, which ranges between roughly 30,000 and 130,000 lux.

There are about 10.764 lux in the foot-candle, the old American and English unit of illuminance. Candles have been used for artificial lighting for so long that they became part of the language of light: foot-candle, candlepower, candela. Even the lux is defined as one candela-steradian (that is, one lumen) per square meter. General Electric once promotionally offered the foot candle shown at right (in an image, used here with permission, by Greg Van Antwerp from his Video Martyr blog, where you can also see what’s written on the sole, http://videomartyr.blogspot.com/2009/01/foot-candle.html).
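
Because both units are simply lumens per unit area, the conversion factor is just the number of square meters in a square foot (the international foot is exactly 0.3048 m). A minimal Python sketch, with illustrative function names, applies it to the direct-sunlight range mentioned earlier:

```python
# Illuminance conversion between lux (lumens per square meter) and
# foot-candles (lumens per square foot).
# 1 ft = 0.3048 m exactly, so 1 ft^2 = 0.09290304 m^2 and
# 1 fc = 1 lm / 0.09290304 m^2, roughly 10.764 lx.
SQ_METERS_PER_SQ_FOOT = 0.3048 ** 2
LUX_PER_FOOTCANDLE = 1.0 / SQ_METERS_PER_SQ_FOOT


def lux_to_footcandles(lux: float) -> float:
    """Convert an illuminance in lux to foot-candles."""
    return lux / LUX_PER_FOOTCANDLE


def footcandles_to_lux(fc: float) -> float:
    """Convert an illuminance in foot-candles to lux."""
    return fc * LUX_PER_FOOTCANDLE


if __name__ == "__main__":
    print(f"1 fc = {LUX_PER_FOOTCANDLE:.3f} lx")
    # Direct-sunlight range quoted in the article
    for lux in (30_000, 130_000):
        print(f"{lux:>7,} lx is about {lux_to_footcandles(lux):,.0f} fc")
```

By this arithmetic, direct sunlight runs from roughly 2,800 to 12,000 foot-candles.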

As long ago as the middle of the 19th century, when Michael Faraday began giving his lectures on “The Chemical History of a Candle,” candles were very similar to today’s: hard cylinders that burned down, consuming both fuel and wick and emitting a relatively constant amount of light (which is how the candle became a unit of light measurement). Before those lectures, however, candles were typically soft, smoky, stinky, sometimes toxic, and highly variable in light output, at least in part because their wicks weren’t consumed as the candles burned. Instead, the wicks had to be trimmed frequently, often with candle “snuffers,” specialized scissors with attached boxes to catch the falling wicks, as shown at left in an ornate version (around 1835) from the Victoria and Albert Museum. More information is here: http://collections.vam.ac.uk/item/O77520/snuffers-j-hobday/.

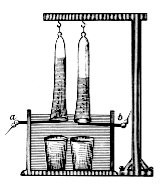

Then came electricity. It certainly changed lighting, but not initially in the way you might think. Famous people are said to be “in the limelight.” The term comes from an old theatrical lighting system in which oxygen and hydrogen were burned and their flame directed at a block or cylinder of calcium oxide (quicklime), which then glowed due to both incandescence and candoluminescence (the candle, again). It could be called the first practical incandescent light. It was so bright that it was often used for spotlights. As for the electric part, the hydrogen and oxygen were gathered in bags by electrolysis of water. At right is an electrolysis system developed by Johann Wilhelm Ritter in 1800.

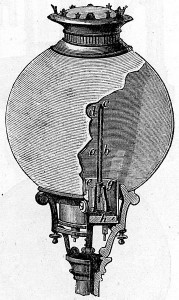

Next, electricity made possible the practical arc light, first used for entertainment at the Princess’s Theatre in London in 1848. In keeping with the candle theme, one version (shown at left) was called Jablochkoff’s candle. But its light was so harsh and bright (said to be brighter than the sun itself) that theaters continued to use gas lighting instead.

Like candles and oil lamps before it, gas lighting generated lots of heat, consumed oxygen, produced carbon dioxide, and caused fires. Unlike candles and oil lamps, however, gas lights could be dimmed simultaneously throughout a theater (though there was a candle-dimming apparatus, below, depicted in a book published in 1638). But gas lights couldn’t be blacked out completely and then re-lit without someone igniting each jet, at least not until 1866. That’s when electricity made its third advance in lighting: it provided instant ignition for gas lights at the Prince of Wales’s Theatre in Liverpool.

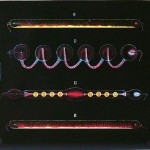

Actually, there was an earlier contribution of electricity to lighting. In 1857, Heinrich Geissler evacuated the air from a glass tube, introduced another gas, and then ran a current through electrodes at either end, causing the gas to glow. As the drawing at right from the 1869 physics book Traité Élémentaire de Physique shows, however, Geissler tubes were initially used more to provide something to look at than to provide light for looking at other things. They were, effectively, the opposite of arc lights.

The first practical incandescent electric lamps, whether you prefer Joseph Swan or Thomas Edison (or his staff) as the source, appeared around 1880 and were used for entertainment lighting almost immediately at such theaters as the Savoy in London, the Mahen in Brno, the Palais Garnier in Paris, and the Bijou in Boston. At about the same time, inventors began offering proposals for another invention: television. Henry Sutton’s 1885 version was called the “telephane” (the word television wouldn’t be coined until 1900). As its diagram at left (from the November 7, 1890 issue of The Telegraphic Journal and Electrical Review) shows, however, the newfangled incandescent lamp wasn’t yet to be trusted: the telephane receiver used an oil lamp as its light source.

When Baird (1925) and Farnsworth (1927) first demonstrated their television systems, the light sources in their receiver displays were a neon lamp (a version of a Geissler tube) and a cathode-ray tube (CRT) respectively, but the light sources used to illuminate the scenes for the cameras were incandescent light bulbs. Baird’s first human subject, William Edward Taynton, actually fled the camera because he was afraid the hot lights would set his hair on fire. Farnsworth initially used an unfeeling photograph as his subject. When he graduated to a live human (his wife, Elma, known as Pem, shown at right) in 1929, she kept her eyes closed so as not to be blinded by the intense illumination.

The CRT, developed in 1897 and first used (in a version shown at left) to display video images in 1907, is also a tube through which a current flows, but its light (like that of a fluorescent lamp) comes not directly from a glowing gas but from excitation of phosphors (chemicals that emit light when stimulated by an electron beam or such forms of electromagnetic radiation as ultraviolet light). But, if it’s a light source, why not use it as one?

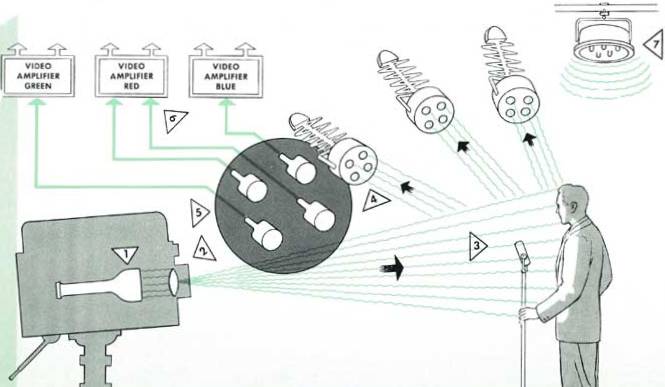

Actually, the first use of a scanned light source in video acquisition involved an arc light instead of a CRT. As shown below, in a 1936 brochure about Ulises Sanabria’s theater television system (reproduced on the web site of the excellent Early Television Museum, here: http://www.earlytelevision.org/sanabria_theater_tv.html), the device behind the person with the radioactive music at the top of the post is a television camera. But it works backwards. The light source is a carbon arc, focused into a narrow beam and scanned television style. The large disks surrounding the camera opening, which appear to be lights, are actually photocells to pick up light reflected by the subject from the scanned light beam.

Unfortunately, the photocells could also pick up any other light, so the studio had to be completely dark except for the “flying spot” scanning the image area. That made it impossible to read music, hence the invention of music printed in radium ink on black paper. The radium glow was sufficient to make out the notes but too weak to interfere with the reflected camera light.
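
Why stray light was so destructive can be illustrated with a toy model. This is a hypothetical sketch, not a description of Sanabria’s actual circuitry: it treats the scene as a grid of reflectances, assumes the photocell output is simply the reflected spot light plus a constant amount of stray ambient light, and the function names are invented for illustration.

```python
# A toy model of a flying-spot camera (illustrative only).
# The scene is a grid of reflectances from 0 (black) to 1 (white).
# A single bright spot visits each position in raster order; the
# photocells respond to ALL light reaching them at that instant.
from typing import List


def flying_spot_scan(reflectance: List[List[float]],
                     spot_power: float = 1.0,
                     ambient: float = 0.0) -> List[float]:
    """Return the photocell signal, one sample per scanned position.

    spot_power * reflectance carries the picture; `ambient` is stray light
    that reaches the photocells wherever the spot is, so it adds the same
    offset to every sample.
    """
    signal = []
    for row in reflectance:      # top to bottom, like a TV raster
        for r in row:            # left to right along each line
            signal.append(spot_power * r + ambient)
    return signal


def contrast_ratio(signal: List[float]) -> float:
    """Brightest sample divided by darkest sample."""
    return max(signal) / min(signal)


if __name__ == "__main__":
    # Hypothetical checkerboard scene of near-black and near-white patches.
    scene = [[0.02, 0.9, 0.02, 0.9],
             [0.9, 0.02, 0.9, 0.02]]
    for ambient in (0.0, 0.1, 1.0):
        sig = flying_spot_scan(scene, ambient=ambient)
        print(f"ambient={ambient:.1f}  contrast={contrast_ratio(sig):.1f}:1")
```

In this model the scene keeps its full 45:1 contrast in total darkness but drops below 2:1 when stray light matches the spot’s own power, while a faint glow, like that of radium ink, adds only a small offset.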

Of course, the studio was still dark, which made moving around difficult. Were it not for the fact that the phrase “trip… the light fantastick” appears in a 1645 poem by John Milton, one might suspect it was a description of such a studio. The scanned beam of “the light fantastic” emerged from the camera, and, because it was the only light in the room, everyone had to be careful not to trip. Inventor Allen B. DuMont came up with a solution called Vitascan, shown below in an image from a 1956 brochure at the Early Television Museum: http://www.earlytelevision.org/dumont_vitascan_brochure.html

Again, the camera works backwards: light from a scanned CRT emerges from it, and photomultiplier tubes pick up the reflections. This being a color-television system, there are photomultiplier tubes for each color. Even though the light emerges from the camera, the pickup assemblies can be positioned like lights for shadows and modeling and can even be “dimmed.” It’s the “sync-lite” (item 7) at the upper right, however, that eliminated the trip hazard. Its lamps would flash on for only 100 millionths of a second at a time, synchronized to the vertical blanking interval, when no image scanning takes place. That provided bright task illumination without affecting even the most contrasty mood lighting. In that sense, Vitascan worked even better than today’s lighting.
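
The sync-lite timing can be sanity-checked with a little arithmetic. Only the 100-microsecond flash comes from the brochure; the field rate (59.94 fields per second, as in NTSC color), 262.5 lines per field, and a vertical blanking interval of about 21 lines are assumptions, so treat the results as approximate.

```python
# Back-of-the-envelope check on the Vitascan "sync-lite" figures.
# Assumed (not from the brochure): NTSC color timing of 59.94 fields/s,
# 262.5 lines per field, and about 21 lines of vertical blanking per field.
FIELD_RATE_HZ = 60_000 / 1001                   # about 59.94 fields per second
LINE_PERIOD_S = 1 / (FIELD_RATE_HZ * 262.5)     # about 63.56 microseconds
BLANKING_LINES = 21                             # assumed, per field
FLASH_S = 100e-6                                # "100 millionths of a second"


def flash_duty_cycle(flash_s: float, field_rate_hz: float) -> float:
    """Fraction of time the task lights are on, with one flash per field."""
    return flash_s * field_rate_hz


def blanking_duration(lines: int, line_period_s: float) -> float:
    """Length of the vertical blanking interval in seconds."""
    return lines * line_period_s


if __name__ == "__main__":
    duty = flash_duty_cycle(FLASH_S, FIELD_RATE_HZ)
    blank = blanking_duration(BLANKING_LINES, LINE_PERIOD_S)
    print(f"lamps on {duty:.2%} of the time")
    print(f"blanking is about {blank * 1e6:.0f} us; flash fits: {FLASH_S < blank}")
```

Under those assumptions the lamps would be lit only about 0.6% of the time, and each flash would fit comfortably inside a blanking interval of roughly 1.3 milliseconds, landing only where the picture signal is blanked anyway.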

Vitascan wasn’t the only time high-brightness CRTs were used to replace incandescent lamps. Consider Mitsubishi’s Diamond Vision stadium giant video displays. The first (above left) was installed at Dodger Stadium in 1980. It used flood-beam CRTs (above right), one per color, as its illumination source, winning Mitsubishi an engineering Emmy award this January.

In the same category (“pioneering development of emissive technology for large, outdoor video screens”) another award was given to a Japan-born inventor. Although he once worked for a company that made phosphors for CRTs, he’s more famous for the invention that won him the Emmy award. It’s described in the exciting book Brilliant! by Bob Johnstone (Prometheus 2007). The book covers not only the inventor but also his invention. It’s subtitled Shuji Nakamura and the Revolution in Lighting Technology.

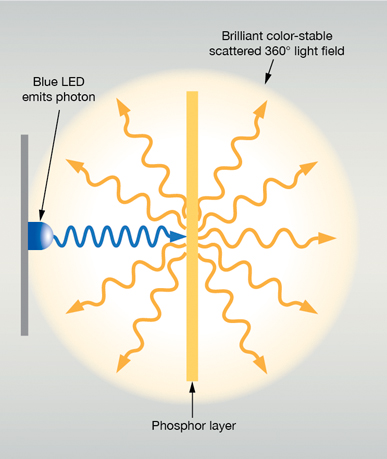

Nakamura came up with the first high-brightness pure blue LED, followed by the high-brightness pure green LED. Those led not only to LED-based giant video screens but also to the white LED (sometimes created from a blue LED with a yellow phosphor). And bright, white LEDs led to the rapid replacement of seemingly almost all other forms of lighting in many moving-image productions.

Those who attended the annual NAB equipment exposition in Las Vegas in April couldn’t avoid seeing LED lighting equipment. But they might also have noticed some dissension. There was, for example, PRG’s TruColor line with “Remote Phosphor Technology.” Remember the “pure blue” and “pure green” characterizations of Nakamura’s inventions? If those colors happen to match the blue and green photosensitivities of camera image sensors, all is well. If not, colors can be misrepresented. So PRG TruColor moves the phosphors away from the LEDs (the “remote” part), creating a more diffuse light with what PRG touts as better color performance, a higher color-rendering index (http://www.prgtrucolor.com/content/remote-phosphor).

Hive, also at NAB, claims a comparably high CRI for its lights, but they don’t use LEDs at all. Instead, they’re plasma. They’re not plasma in the sense of using a flat-panel TV as a light source; they’re plasma in the physics sense. They use ionized gas.

Unlike Geissler tubes, however, Hive’s plasma lamps (from Luxim) don’t have electrodes. The tiny lamps (right) are said by their maker to emit as much as 45,000 lumens each and to retain 70% of their initial output even after 50,000 hours (almost six years of continuous operation).
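
The lamp-life figures are easy to check. A minimal sketch using the numbers quoted above; the function names are illustrative, and a 365.25-day year is assumed:

```python
# Quick arithmetic on the maker's lamp-life claims quoted in the text.
HOURS_PER_YEAR = 24 * 365.25  # average year, including leap years


def hours_to_years(hours: float) -> float:
    """Convert continuous operating hours to years."""
    return hours / HOURS_PER_YEAR


def maintained_output(initial_lumens: float, fraction: float) -> float:
    """Light output remaining after depreciation to `fraction` of initial."""
    return initial_lumens * fraction


if __name__ == "__main__":
    print(f"50,000 h is about {hours_to_years(50_000):.1f} years of continuous operation")
    print(f"70% of 45,000 lm = {maintained_output(45_000, 0.70):,.0f} lm")
```

That works out to about 5.7 years of nonstop operation, with at least 31,500 lumens still emerging at the end, if the claims hold.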

If they don’t have electrodes, what makes them light up? Luxim’s FAQ (http://www.luxim.com/technology/plasma-lighting-faq) describes the radio-frequency field used, but Hive’s site (http://www.hivelighting.com/) gets into specific frequencies: “Hive’s lights are completely flicker-free up to millions of frames per second at any frame rate or shutter angle. Operating at 450 million hertz, we’re still waiting for high-speed cameras to catch up.”
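
One way to read Hive’s claim is to count how many cycles of the 450 MHz drive fit into a single exposure, where the exposure time is the shutter angle divided by 360°, divided by the frame rate. This is an illustrative sketch: it assumes any residual ripple in light output tracks the RF drive, which simplifies how such lamps actually behave.

```python
# How many cycles of Hive's quoted 450 MHz drive fall within one exposure?
# Simplifying assumption: any light ripple follows the RF drive frequency.
RF_HZ = 450e6


def exposure_seconds(frame_rate: float, shutter_angle_deg: float) -> float:
    """Exposure time for a rotary-shutter equivalent: (angle / 360) / fps."""
    return (shutter_angle_deg / 360.0) / frame_rate


def rf_cycles_per_exposure(frame_rate: float, shutter_angle_deg: float,
                           rf_hz: float = RF_HZ) -> float:
    """Number of RF drive cycles that elapse during one exposure."""
    return rf_hz * exposure_seconds(frame_rate, shutter_angle_deg)


if __name__ == "__main__":
    for fps, angle in ((24, 180), (1_000, 180), (1_000_000, 360)):
        n = rf_cycles_per_exposure(fps, angle)
        print(f"{fps:>9,} fps, {angle} deg shutter: {n:,.0f} RF cycles per exposure")
```

At 24 frames per second with a 180° shutter, each exposure spans about 9.4 million cycles; even at a million frames per second with a 360° shutter, each exposure still spans 450 cycles, so under this model any ripple averages out within every frame.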