IBC-ing the Future

This is what happened on Monday night, September 10, at the International Broadcasting Convention (IBC) in Amsterdam: A crowd crammed into the large (1750-seat) auditorium to see the future–well, a future. They saw Hugo in stereoscopic 3D.

The movie, itself, is hardly futuristic. It was released in 2011, and it takes place almost a century ago.

So was it, perhaps, astoundingly, glasses-free? No. And it wasn’t the first 3D movie screened at IBC. It wasn’t even the first of IBC 2012; Prometheus was shown two nights earlier. But it was a special event. According to one participant who had previously seen Hugo stereoscopically, “It was awesome–like a different movie!”

The big deal? The perceived screen brightness was that of a well-projected 2D movie, perhaps four to five times greater than that of typical stereoscopic 3D movie projection.

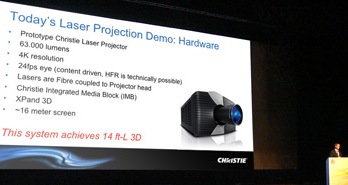

It was said to be the world’s first laser-projected screening of a full-length movie (and in stereoscopic 3D), and it used an astoundingly bright, 63,000-lumen Christie Digital projector. Above left is a picture of Christie’s Dr. Don Shaw discussing it before the screening. You can read more about it in Christie’s press release here: http://www.christiedigital.com/en-us/news-room/press-releases/Pages/Worlds-First-Laser-Projected-Screening-of-Full-Length-Movie-Debuts-With-Christie-Laser-Projector.aspx

Can you buy that projector? Not today and maybe never. That’s why the audience saw only a possible future, but IBC has a pretty terrific track record of predicting the future. Today, for example, television is digital–whether via broadcast, cable, satellite, internet, or physical media–and virtual sets and virtual graphics are common; both digital TV and virtual video could be found at IBC in 1990, part of which is shown below.

As can be seen from Sony’s giant white “beach cabana” above, IBC had outgrown the convention facilities in Brighton, England, where it was located that year. The following convention (in 1992, because it was held every two years at the time) moved to Amsterdam’s RAI convention center, which has been adding new exhibit halls seemingly each year to try to keep up (there are now 14).

After the move, IBC became an annual event, show-goers could relax on a sand beach (left) and eat raw herring served by people in klederdracht (right), and finding the futures became easier. They were stuck into a Future Zone.

It’s not that everything new was put into the Future Zone. At IBC 2011, in a regular exhibit hall, Sony introduced its HDC-2500, one of the most-advanced CCD cameras ever made; at IBC 2012, Grass Valley introduced the LDX series, based on their CMOS Xensium sensor, perhaps one of the most-advanced lines of CMOS cameras ever made. And they’re supposed to be upgradable–someday–to the high-dynamic-range mode I showed in my coverage of IBC 2009 here: http://www.schubincafe.com/2009/09/20/walkin-in-a-camera-wonderland/

ARRI makes cameras that, in theory, at least, compete with those Grass Valley and Sony ones. ARRI also makes lighting instruments. The future of lighting (and much of the present) seems to be LED-based. But ARRI demonstrated in its booth how some LED lights can produce wildly different looking colors on different cameras, including (labeled by brand) Grass Valley and Sony. Their point was that ARRI’s new L7-T (tungsten color-temperature) LED lighting (left) looks pretty much the same on those different cameras.

Grass Valley’s LDX line drew crowds, but whether they came for the engineering of the cameras or to look at the leggy model in hot pants shouldering one was not entirely clear. IBC 2012 set a new official attendance record, but, with 14 exhibit halls (well, 13 plus one devoted to meeting rooms), not every exhibitor had crowds all the time, even if they were deserved. Consider Silentair’s mobile unit (right). It’s rated at a noise level of NR15 (comparable to the U.S. NC15). According to EngineeringToolBox.com, concert halls and recording studios often use the much looser NR25 rating. The unit comes in multiple colors and can be installed in about half an hour by unskilled labor.

Where might it be used? Perhaps in or near Dreamtek’s Broadcastpod (left). About the size of an old amusement-park photo booth (near left), it comes complete with HD camera, prompter glass (far left), media management, and behind-the-talent graphics. It’s also well lit, acoustically treated and ventilated.

There were many other delights in the exhibit halls, from a Boeing 737 simulator with real-time, near-photorealistic graphics 17,920 pixels wide from Professional Show (right) to theatrically presented 810-frame-per-second (fps) 4K images shot by the For-A FT-One.

There were Geniatech’s USB-stick pay-TV tuner with replaceable security card (left) and the Fraunhofer Institut’s work on computational photography (like using the masked-pixels resolution-expansion system described at this year’s HPA Tech Retreat to increase dynamic range, too) and light-field capture for motion video.

The most light-field equipment at the show could be found at the European Union’s 3D VIVANT project booth in the Future Zone. Hungary’s Holografika showed its Holovizio light-field display. In perhaps their most-amazing demo, they depicted five playing cards, edge-on to the screen. From the front, they looked like five vertical lines, but the photos above, shot at their IBC location (complete with extraneous reflections), show (not quite as well as being there) what they looked like from left or right.

One could walk from one edge of the display to the other, and the view was always appropriate to the angle and offered 1280 x 768 resolution to each eye at each location. Unfortunately, that meant that the whole display was close to 80 megapixels, and no camera system at IBC could provide matching pictures.
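
To put that megapixel figure in perspective, here is a rough back-of-the-envelope sketch. It treats "close to 80 megapixels" as exactly 80 million pixels (an assumption made only for illustration) and divides by the quoted per-eye resolution. A light-field display does not necessarily work in discrete views, so the result is just a sense of scale, not a description of how the Holovizio operates.

```python
# Per-eye resolution quoted for the demo.
VIEW_WIDTH = 1280
VIEW_HEIGHT = 768

# "Close to 80 megapixels" taken as exactly 80 million (illustrative assumption).
TOTAL_PIXELS = 80_000_000

pixels_per_view = VIEW_WIDTH * VIEW_HEIGHT
equivalent_views = TOTAL_PIXELS / pixels_per_view

print(f"pixels per view: {pixels_per_view:,}")
print(f"equivalent full-resolution views: {equivalent_views:.1f}")
```

In other words, feeding the display properly would take roughly the pixel count of 80-odd separate 1280 x 768 pictures at once.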

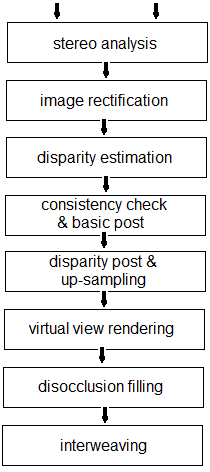

The top award-winning IBC conference paper was “Fully Automatic Conversion of Stereo to Multiview for Autostereoscopic Displays” by Christian Riechert and four other authors from Fraunhofer’s image processing department. The process is shown at right.

Holografika showed some upconversions from stereoscopic views, but those didn’t fully utilize the capability of their display. In fact, none of the autostereoscopic displays at IBC could (in my opinion) match the glasses-required versions. One of the best-looking of the latter was a Sony 4K LCD using alternate-line polarization; with passive glasses, it offered 3840 x 1080 simultaneously to each eye.

Right behind Holografika in the 3D VIVANT booth, however, was Brunel University, and they had some camera systems that might, someday, properly stimulate something like the Holovizio display. At left is one of their holoscopic lens adaptors on an ARRI Alexa camera. The long tube is just for relaying the image, and, by the end of the show, they added a small, easily hand-holdable prototype without the relay tubes. The Brunel University area also featured a crude-resolution glasses-free display made from an LCD computer monitor and not much thicker than the unmodified original.

Across the aisle from the 3D VIVANT booth, DeCS Media was showing another way to capture 3D for autostereoscopic display with a single lens–that is, a single image-capturing lens (any lens on any camera) and a DeCS Media module to capture depth information (as shown at right). Even Fraunhofer’s Christian Riechert, in the Q&A session following the presentation of the award-winning paper, pointed out that, if separate depth information is available, the process of multi-view generation is simplified. DeCS Media says their process works live (though disocclusion would require additional processing).

There was something else of interest in the 3D VIVANT booth: the Institut für Rundfunktechnik’s MARVIN (Microphone Array for Realtime and Versatile INterpolation), a ball (left), about the size of a soccer ball, containing microphones that capture sound in 3D and can be configured in many different ways. The IRT demoed MARVIN with position-sensing headphones; as the listener moved, the sound vectors changed appropriately.

Looking even more like a soccer ball was Panospective’s ball camera (shown at right in an image by Jonas Pfeil, http://jonaspfeil.de/ballcamera). It can be thrown (and, perhaps, even kicked), and, when it reaches its maximum height, its multiple cameras (36 of them!) capture images covering 360 degrees spherically. Viewers holding a tablet can see any part of the image, seamlessly, by moving the tablet around.

The Panospective ball’s images might be spatial, but they are neither stereoscopic nor light field. The same might be said of Mediapro Research’s Project FINE demonstrations. Using a few cameras–not necessarily shooting from every direction–they can reconstruct the space in which an event is captured and place virtual cameras anywhere within it (even “shooting” straight down from a non-existent aircraft). In just the few months since their demo at the NAB convention, they seem to have advanced considerably.

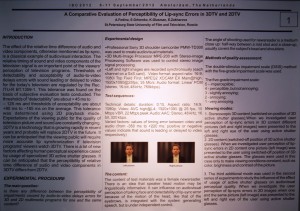

Another stereoscopic-3D revelation in the Future Zone related to lip-sync. It was printed on a couple of posters from St. Petersburg State University of Film and Television in Russia. The researchers, A. Fedina, E. Grinenko, K. Glasman, and E. Zakharova, shot a typical news-anchor setup in 3D and then tested sensitivity to lip sync in both 2D and shutter-glasses stereoscopic 3D. One poster is shown at left. Their experimental results show that 3D viewers are almost twice as sensitive as 2D viewers to audio-leading-video lip-sync error (27 ms vs. 50).

The IBC 2012 Future Zone was by no means limited to 3D. Other posters covered such topics as integrating social media into media asset management and using crowdsourcing to add metadata to archives.

Social media and crowdsourcing suggest personal computers and hand-held devices–the legendary second and third screens. But viewers appear to over-report new-media use and under-report plain, non-DVR, television viewing. How can we know what viewers actually do?

One exhibitor at the IBC 2012 Future Zone was Actual Customer Behaviour. With permission, they spy on actual viewers as they actually use various screens. Then experts in advertising, anthropology, behavior, ethnography, marketing, and psychology figure out what’s going on, including engagement. Their 1-3-9 Media Lab, for example, is named for the nominal viewing distances (in feet) of handheld devices, computer screens, and TV screens. But lab head Sarah Pearson notes that TV viewing distance can vary significantly just from leaning back when relaxing or leaning in with excitement.

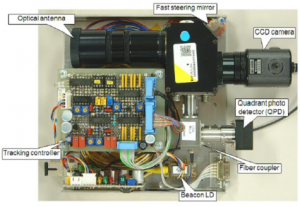

There were other technology demonstrations. Japan’s National Institute of Information and Communications Technology, which, in the past, has shown such amazing technologies as holographic video and tactile-feedback (with aroma!), had a possibly more practical but no less amazing compact free-space optical link with autotracking and a current capacity of 1.28 terabits per second (enough to carry more than 860 uncompressed HD-SDI channels).
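
The channel count can be checked with simple division. The sketch below assumes the nominal HD-SDI serial rate of 1.485 Gbit/s per channel; that figure is not given in the text above and is used here only as the usual nominal value.

```python
# Link capacity quoted above: 1.28 terabits per second.
LINK_CAPACITY_BPS = 1_280_000_000_000

# Nominal HD-SDI rate per channel (assumption: 1.485 Gbit/s).
HD_SDI_RATE_BPS = 1_485_000_000

# Whole channels that fit, ignoring any framing or multiplexing overhead.
channels = LINK_CAPACITY_BPS // HD_SDI_RATE_BPS

print(f"uncompressed HD-SDI channels: {channels}")
```

Under that assumption the link carries 861 whole channels, consistent with "more than 860."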

There were still more Future Zone exhibitors, such as the BBC, Korea’s Electronics and Telecommunications Research Institute, and Nippon Telegraph and Telephone. And, outside the Future Zone, one could find such exhibitors as the European SAVAS project for live, automated subtitling. Then there was NHK, the Japan Broadcasting Corporation, whose Science and Technology Research Laboratories (STRL) won IBC’s highest award this year, the International Honour for Excellence. NHK’s STRL is where modern HDTV originated and where its possible replacement, ultra HDTV, with 16 times as many pixels as normal 1920 x 1080 HDTV, is still being perfected.
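
The factor of 16 follows from quadrupling both dimensions. The sketch below assumes the Super Hi-Vision raster is 7680 x 4320; that resolution is not stated in the text above and is supplied here as an assumption.

```python
# Normal HDTV raster, as quoted above.
HD_WIDTH, HD_HEIGHT = 1920, 1080

# Super Hi-Vision raster (assumption: 7680 x 4320, four times HD in each dimension).
SHV_WIDTH, SHV_HEIGHT = 7680, 4320

hd_pixels = HD_WIDTH * HD_HEIGHT
shv_pixels = SHV_WIDTH * SHV_HEIGHT
ratio = shv_pixels // hd_pixels

print(f"HD pixels:  {hd_pixels:,}")
print(f"SHV pixels: {shv_pixels:,}")
print(f"ratio: {ratio}x")
```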

Part of NHK’s exhibit at the IBC 2012 Future Zone was two 85-inch UHDTV LCD screens showing material shot at the Olympic Games in London this summer. NHK has previously shown UHDTV via projection on a large screen. The 1-3-9 powers-of-three viewing-distance progression might continue to 27 feet for a multiplex cinema screen and 81 feet for IMAX, but NHK’s Super Hi-Vision (their term for UHDTV) was always viewed from closer distances. The 85-inch direct-view screens were attractive in a literal sense. They attracted viewers to get closer and closer to the screens to see fine detail.

Another NHK Super Hi-Vision (SHV) demo involved shooting and displaying at 120 frames per second (fps) instead of 60. At far right above is the camera used. Just above the lens is a display showing 120-fps images and to its left one showing 60-fps. The difference in sharpness was dramatic. But to the right of the 120-fps images and to the left of the 60-fps were static portions of the image, and they looked sharper than either moving version. At the left in the picture above is the moving belt the SHV camera was shooting, and it looked sharper than even the 120-fps images.

So maybe 120 fps isn’t the limit. Maybe it should be more like 300 fps. Might that appear at some future IBC? Actually, it was described (and demonstrated) at IBC 2008: http://www.bbc.co.uk/rd/pubs/whp/whp169.shtml