Leg Asea

2013 HPA Tech Retreat Broadcasters Panel: ABC, CBC, CBS, Ericsson, Fox, NAB, NBC, and PBS are shown (not in order); EBU, NHK, Sinclair, Univision, and locals were also present

Joe Zaller, a manager of the very popular (16,000-member) Television Broadcast Technologies group on LinkedIn, tweeted on February 21 from the 2013 HPA Tech Retreat in Indian Wells, California: “Pretty much blown away from how much I learned Wednesday at [the broadcasters] panel… just wish it had been longer.”

Adam Wilt, in his huge, six-part, 14-web-page (each page perhaps 20 screens long) coverage of the five-day event for ProVideoCoalition.com, put it this way: “When you get many of the best and brightest in the business together in a conference like this, it’s like drinking from a fire hose. That’s why my notes are only a faint shadow of the on-site experience. Sorry, but you really do have to be there for the full experience”: http://provideocoalition.com/awilt/story/hpa-tech-retreat-wrap-up

In his Display Central coverage, Peter Putman called it “one of the leading cutting-edge technology conferences for those working in movie and TV production”: http://www.display-central.com/free-news/display-daily/4k-in-the-desert-2013-hpa-tech-retreat/. The European Broadcasting Union’s technology newsletter noted of the retreat, held in the Southern California desert, “There were also many European participants at HPA 2013, in particular from universities, research institutes and the supplier industry. It has clearly become an annual milestone conference for technology strategists and experts in the media field”: http://tech.ebu.ch/news/new-media-technology-on-the-agenda-at-te-22feb13

It was all those things and more. HPA is the Hollywood Post Alliance, but the event is older than HPA itself. It is by no means restricted to Hollywood (presenters included the New Zealand team that worked on the high-frame-rate production of The Hobbit and the NHK lab in Japan that shot the London Olympics in 8K), and it’s also not restricted to post. This year’s presentations touched on lighting, lenses, displays, archiving, theatrical sound systems, and even viewer behavior while watching one, two, or even three screens at once.

It is cutting-edge high tech–the lighting discussed included wireless plasmas, the displays brightnesses as high as 20,000 cd/m² (and as low as 0.0027), and the archiving artificial, self-replicating DNA–and yet there was a recognition of a need to deal with legacy technologies as well. Consider that ultra-high-dynamic-range (HDR) display.

The simulator created by Dolby for HDR preference testing is shown at left, minus the curtains that prevented light leakage. About the only way to achieve sufficient brightness today is to have viewers look into a high-output theatrical projector. In tests, viewers preferred levels far beyond those available in today’s home or theatrical displays. But a demonstration at the retreat seemed to come to a different conclusion.

The SMPTE standard for cinema-screen brightness, 196M, calls for 16 foot-lamberts or 55 cd/m² with an open gate (no film in the projector). With film, peak white is about 14 fL or 48 cd/m², a lot lower than 20,000. Whether real-world movie theaters achieve even 48–especially for 3D–is another matter.
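
The paired foot-lambert and cd/m² figures quoted here follow from the definition of the foot-lambert as 1/π candela per square foot. A minimal sketch of that conversion follows; the function and constant names are illustrative, not taken from any standard or library.

```python
import math

# One foot-lambert is 1/pi candela per square foot;
# one foot is exactly 0.3048 m, so one square foot is 0.3048**2 square meters.
SQUARE_METERS_PER_SQUARE_FOOT = 0.3048 ** 2
CD_M2_PER_FL = 1 / (math.pi * SQUARE_METERS_PER_SQUARE_FOOT)  # roughly 3.426


def fl_to_cd_m2(foot_lamberts):
    """Convert luminance in foot-lamberts to candelas per square meter."""
    return foot_lamberts * CD_M2_PER_FL


# Open-gate and with-film levels mentioned in the text.
for fl in (16, 14):
    print(f"{fl} fL is about {fl_to_cd_m2(fl):.0f} cd/m²")
```

Rounded to whole numbers, the same function also reproduces the other brightness pairs quoted in this article.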

During the “More, Bigger, But Better?” super-session at the retreat, a non-depolarizing screen (center at right) was set up, the audience put on 3D glasses, and scenes were projected in 3D at just 4.5 fL (15 cd/m²) and again at 12 fL (41 cd/m²). The audience clearly preferred the latter.

Later, however, RealD chief scientific officer Matt Cowan showed a scene from an older two-dimensional movie at 14, 21, and 28 fL (48, 72, and 96 cd/m²). This time, the audience (but not everyone in the audience) seemed to prefer 21 to 28. Cowan led a breakfast roundtable one morning on the question “Is There a ‘Just Right’ for Cinema Brightness?”

Of course, as Dolby’s brightness-preference numbers showed, a quick demo is not the same as a test, and people might be reacting simply to the difference between what they are used to and what they were shown. The same might be the case with reactions to the high-frame-rate (HFR) 48 frames per second (48 fps) of The Hobbit. When the team from Park Road Post in New Zealand showed examples in their retreat presentation, it certainly looked different from 24-fps material, but whether it was better or worse was a subjective decision that will likely change with time. There were times when the introduction of sound or color was also deemed detrimental to cinematic storytelling.

At least the standardized cinema brightness of 14 fL had a technological basis in arc light sources and film density. A presentation at the retreat revealed the origin of the 24-fps rate and showed that it had nothing to do with visual or aural perception or technological capability; it was just a choice made by Western Electric’s Stanley Watkins (left) after speaking with Warner Bros. chief projectionist Jack Kekaley. And we’ve gotten used to that choice for 88 years.

Today, of course, actual strands of film have nothing to do with the moving-images business–or do they? Technicolor’s Josh Pines noted a newspaper story explaining the recent crop of lengthy movies by saying that digital technology lets directors go longer because they don’t have to worry about the cost of film stock. But Pines analyzed those movies and found that they had actually, for the most part, been shot on film.

Film is also still used for archiving. Major studio blockbusters, even those shot, edited, and projected electronically, are transferred to three strands of black-&-white film (via a color-separation process), even though that degrades the image quality, for “just-in-case” disaster recovery; b&w film is the only moving-image medium to have thus far lasted more than a hundred years.

At one of the 2013 HPA Tech Retreat breakfast roundtables (right), the head of archiving for a major studio shocked others by revealing they were no longer archiving on film. At the same roundtable, however, studios acknowledged that whenever a new restoration technology is developed, they go to the oldest available source, not a more-recent restoration.

If film brightness, frame rate, and archiving are legacies of the movie business, what about television? There was much discussion at the retreat of beyond-HDTV resolutions and frame rates. Charles Poynton’s seminar on the technology of high[er] frame rates explained why some display technologies don’t have a problem with them while others do.

Other legacies of early television also appeared at the retreat. Do we still need the 0.999000… frame-rate-reduction factor of NTSC color in an age of Ultra-HD? It’s being argued in those beyond-HD standards groups today.
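
The factor written above as 0.999000… is the ratio 1000/1001, which is what turns nominal rates such as 30 and 60 into the familiar 29.97 and 59.94. A minimal sketch of the arithmetic, using exact fractions; the helper name `ntsc_rate` is hypothetical and used only for illustration.

```python
from fractions import Fraction

# NTSC color frame-rate-reduction factor, kept as an exact ratio.
NTSC_FACTOR = Fraction(1000, 1001)


def ntsc_rate(nominal_fps):
    """Return the reduced frame rate for a nominal integer rate, as an exact fraction."""
    return nominal_fps * NTSC_FACTOR


for nominal in (24, 30, 60):
    reduced = ntsc_rate(nominal)
    print(f"{nominal} fps -> {reduced} = {float(reduced):.3f} fps")
```

Keeping the value as a fraction avoids the rounding drift that creeps in when 29.97 is treated as an exact number.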

Interlace and its removal appeared in multiple presentations and even in a demo by isovideo in the demo room (a tiny portion of which is shown at left). As with film restoration from the original, the demo recommended archiving interlaced video as such and using the best-available de-interlacer when necessary. And there appeared to be a consensus at the retreat that conversion from progressive to interlace for legacy distribution is not a problem.

There was no such consensus about another legacy of early television, the 4:3 aspect ratio. One of the retreat’s nine quizzes asked who intentionally invented the 16:9 aspect ratio (for what was then called advanced television), what it was called, and why it was created. The answers (all nine quizzes had winners) were: Joseph Nadan of Philips Labs, 5-1/3:3, and because it was considered the minimum change from 4:3 that would be seen as a valuable-enough difference to make consumers want to buy new TV sets. But that was in 1983.
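
For anyone puzzled by the quiz answer, 5-1/3:3 is simply 16:9 written with the same height term as 4:3. A quick check with exact fractions (the variable names are only illustrative):

```python
from fractions import Fraction

# "5-1/3 : 3" means a width of 5 1/3 units for every 3 units of height.
width = 5 + Fraction(1, 3)
nadan_ratio = width / 3

legacy_ratio = Fraction(4, 3)

print(nadan_ratio)                 # the ratio in lowest terms
print(nadan_ratio / legacy_ratio)  # how much wider than 4:3, for equal height
```

For equal picture height, the new shape is one third wider than 4:3.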

Thirty years later, the retreat officially opened with a “Technology Year in Review,” which called 2013 the “27th (or 78th) Annual ‘This Is the Year of HDTV.’” It noted that, although press feeds often still remain analog NTSC, according to both Nielsen and Leichtman research in 2012, 75% of U.S. households had HDTVs. Leichtman added that 3/5 of all U.S. TVs, even in multi-set households, were HDTV. Some of the remainder, even if not HDTV, might have a 16:9 image shape. So why continue to shoot and protect for a 4:3 sub-frame of the 16:9?

On the broadcasters panel, one U.S. network executive explained the decision by pointing to other Nielsen data showing that, as of July 15 of 2012, although roughly 76% of U.S. households had HDTVs (up 14% from the previous year), in May only 29% of English-language broadcast viewing was in HD and only 25% of all cable viewing. Furthermore, much of the legacy equipment feeding the HDTV sets is not HD capable.

A device need not be HD capable, however, to be able to carry a 16:9 image. Every piece of 4:3 equipment ever built can carry a 16:9 image, even if 4:3 image displays will show it squeezed. So the question seems to be whether it’s better for a majority of U.S. TV sets to get the horizontally stretched picture above left or a minority to get the horizontally squeezed picture at right.
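
The size of the stretch or squeeze is easy to quantify. The sketch below assumes the picture simply fills the whole screen with no letterboxing or pillarboxing, which is an assumption about the display, not something stated at the retreat; the function name is hypothetical.

```python
from fractions import Fraction

WIDE = Fraction(16, 9)
LEGACY = Fraction(4, 3)


def horizontal_distortion(source_aspect, display_aspect):
    """Horizontal scale error when a picture fills a display of another shape.

    Greater than 1 means objects look stretched (too wide);
    less than 1 means they look squeezed (too narrow).
    """
    return display_aspect / source_aspect


# A 4:3 picture filling a 16:9 set: the stretched case.
print(horizontal_distortion(LEGACY, WIDE))
# A 16:9 picture filling a 4:3 set: the squeezed case.
print(horizontal_distortion(WIDE, LEGACY))
```

Under that assumption the two errors are reciprocal, so the real question is how many sets see each one, not which distortion is larger.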

What do actual viewers think about legacy technologies? Two sessions scarily provided a glimpse. A panel of students, studying in the moving-image field, offered some comments that included a desire to text during cinema viewing. And Sarah Pearson of Actual Customer Behaviour in the UK showed sequences shot (with permission) in viewer homes on both sides of the Atlantic, analyzed by the 1-3-9 Media Lab (example above left). Viewers’ use of other media while watching TV might shock, but old photos of families gathered around the television often depicted newspapers and books in hand.

It wasn’t only legacy viewing that was challenged at the retreat. Do cameras need lenses? There was a mention of meta-materials-based computational imaging.

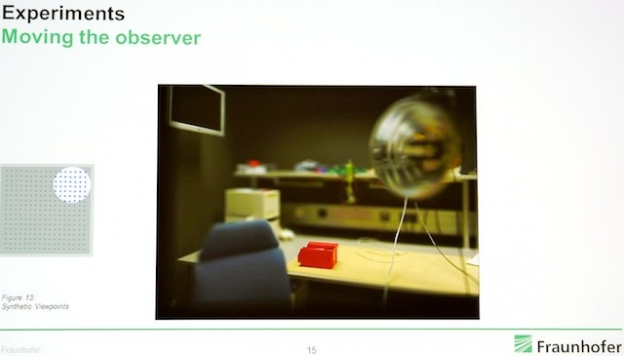

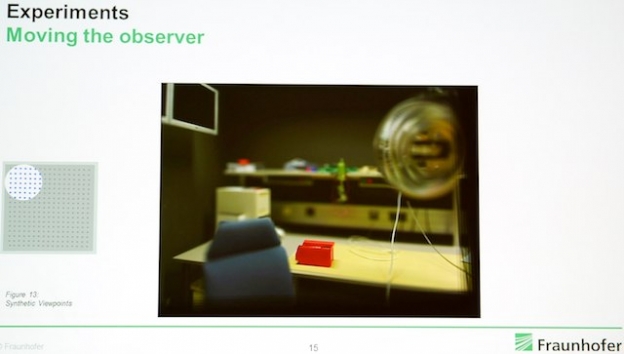

Do cameras need to move to change viewpoint, or can that be done in post? Below are two slides from “The Design of a Lightfield Camera,” a presentation by by Siegfried Foessel of Germany’s Fraunhofer Institut (as shot off the screen by Adam Wilt for his ProVideoCoalition.com coverage of the retreat: http://provideocoalition.com/awilt/story/hpa-tech-retreat-day-4). Look at the left of the top of the chair and what’s behind it.