Enabling the Fix

Sometimes cliches are true. Sometimes the check is in the mail. And sometimes you can fix it in post. Amazingly, the category of what you can fix might be getting a lot bigger.

At this month’s NAB show, there was the usual parade of new technology, from Sonic Notify’s near-ultrasonic smartphone signaling for extremely local advertising, on the order of two meters or so (palmable transducer shown at left), to Japan’s National Institute of Information and Communications Technology’s TV “white space” transmissions per IEEE 802.22. In shooting, for those who like the large-sensor image characteristics of the ARRI Alexa but need the “systemization” of a typical studio/field camera, there was the Ikegami HDK-97ARRI (right), with the front end of the former and the back end of the latter.

Even where items weren’t entirely new, there was great progress to be seen. Dolby’s autostereoscopic (no glasses) 3D demo (left) has come a long way in one year. So has the European Project FINE, which can create a virtual-camera viewpoint almost anywhere, based on just a few normally positioned cameras. Last year, there was a lot of processing time per frame; this year, the viewpoint repositioning was demonstrated in real-time.

If you’re more interested in displays, consider what’s been going on in direct-view LED video. It started out in outdoor stadium displays, where long viewing distances would hide the visibility of the individual LEDs. At NAB 2013, two companies, Leyard (right) and SiliconCore, showed systems with 1.9-mm pixel pitch, leaving the LED structure virtually invisible even at home viewing distances. Is “virtually” not good enough? SiliconCore also showed their new Magnolia panel, with a pitch of just 1.5 mm!

The Leyard display shown here (and at NAB) was so-called “4K,” with more than twice the number of pixels of so-called “Full HD” across the width of the picture. 4K also typically has 2160 active (picture-carrying) lines per frame, twice 1080, so it typically has four times the number of pixels of the highest-resolution form of HD.
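
The pixel arithmetic in that paragraph can be checked with a short sketch. The 3840-pixel width used here is an assumption (the text gives only the 2160-line height, and wider 4096-pixel variants also exist), and the names `FULL_HD` and `UHD_4K` are illustrative only.

```python
# Pixel-count arithmetic for the formats named above.
# The 3840-wide "4K" figure is an assumption; 4096-wide variants also exist.
FULL_HD = (1920, 1080)
UHD_4K = (3840, 2160)   # assumed width; the article gives only the 2160 lines


def pixel_count(size):
    """Return the total number of pixels for a (width, height) pair."""
    width, height = size
    return width * height


def pixel_ratio(larger, smaller):
    """Return how many times more pixels `larger` has than `smaller`."""
    return pixel_count(larger) / pixel_count(smaller)


if __name__ == "__main__":
    print(pixel_count(FULL_HD))          # 2073600
    print(pixel_count(UHD_4K))           # 8294400
    print(pixel_ratio(UHD_4K, FULL_HD))  # 4.0
```

Doubling both the width and the line count is what produces the factor of four.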

4K was unquestionably the major unofficial theme on the NAB show floor, replacing the near-ubiquitous 3D of two years ago. There were 4K lenses, 4K cameras, 4K storage, 4K processing, 4K distribution, and 4K displays. Using a form of the new high-efficiency video codec (HEVC), the Fraunhofer Institute was showing visually perfect 4K pictures with their bit rates reduced to just 5 Mbps; with the approval of the FCC, that means it could be possible to transmit multiple 4K programs simultaneously in a single U.S. broadcast TV channel. But some other things in the same booth seemed to be attracting more attention, including ordinary HD images, shot by INCA, a tiny, 2.5-ounce “intelligent” camera, worn by an eagle in flight. The eagle is shown above left, the camera, with lens, at right. The seemingly giant attached blue rod is a thin USB cable.
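
As a rough check on the "multiple 4K programs" remark, here is a minimal sketch. Only the 5 Mbps figure comes from the demo described above; the roughly 19.39 Mbps payload of a U.S. ATSC channel is an assumption brought in for illustration, and audio and multiplexing overhead are ignored.

```python
# Rough capacity estimate; every figure except the 5 Mbps rate is an assumption.
ATSC_PAYLOAD_MBPS = 19.39   # assumed approximate payload of one U.S. ATSC channel
PROGRAM_MBPS = 5.0          # bit rate quoted for the Fraunhofer 4K demo


def programs_per_channel(channel_mbps, program_mbps):
    """Return how many whole programs fit, ignoring audio and multiplex overhead."""
    if program_mbps <= 0:
        raise ValueError("program bit rate must be positive")
    return int(channel_mbps // program_mbps)


if __name__ == "__main__":
    print(programs_per_channel(ATSC_PAYLOAD_MBPS, PROGRAM_MBPS))  # 3
```

Under those assumptions, three such programs would fit, with some capacity left over for audio and data.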

Throughout the show floor, wherever manufacturers were highlighting 4K, visitors seemed more interested in other items. The official theme of NAB 2013 was METAMORPHOSIS, with the “ME” intended to stand for media and entertainment, not pure self-interest. But most metamorphoses seemed to have happened before the show opened.

Digital cinematography cameras aren’t new; neither are second-screen applications. Mobile DTV was introduced years ago. So was LED lighting.

There were some amazing new technologies discussed at NAB 2013 — perhaps worthy of the metamorphosis label. But they weren’t necessarily on the show floor (at least not publicly exhibited). Attendees at the SMPTE Technology Summit on Cinema (TSC), for example, could watch large-screen bright images that came from a laser projector.

The NAB show was vast, and the associated conferences went on for more than a week. So I’m going to concentrate on just one hour, a panel session called “Advancing Cameras for Cinema,” in one room, the SMPTE TSC, and how it showed the metamorphosis of what might be fixed in post.

Consider the origin of post, the first edit, and it was a doozy! It occurred in 1895 (and technically wasn’t exactly an edit). At a time when movies depicted real scenes, The Execution of Mary, Queen of Scots, in its 27-foot length (perhaps 17 seconds), depicts a living person being led to the chopping block. Then the camera was stopped, a dummy replaced the person, the camera started again, and the head was chopped off. It’s hard to imagine what it must have been like to see it for the first time back then. And, since 1895, much more has been added to the editing tool kit.

It’s now possible to combine different images, generate new ones, “paint” out wires and other undesirable objects, change colors and contrast, and so on. It’s even possible to stabilize jerky images and to change framing at the sacrifice of some resolution. But what if there were no sacrifice involved?

The first panelist of the SMPTE TSC Advancing Cameras session was Takayuki Yamashita of the NHK Science & Technology Research Labs. He described their 8K 120-frame-per-second camera. 8K is to 4K approximately as 4K is to HD, and 120 fps is also four times the 1080i frame rate. This wasn’t a theoretical discussion; cameras were on the show floor. Hitachi showed an 8K camera in a familiar ENG/EFP form (left); Astrodesign showed one dramatically smaller (right).

If pictures are acquired at higher resolutions, they may be reframed in post with no loss of HD resolution. With 8K, four adjacent full-HD-resolution images can be extracted across the width of the 8K frame and four from top to bottom. A shakily captured image that bounces as much as 400% of the desired framing can be stabilized in post with no loss of HD resolution. And the higher spatial sampling rate also increases the contrast ratio of fine detail.
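
The reframing and stabilization arithmetic can be sketched as follows. This is only an illustration under stated assumptions: the 7680 x 4320 frame size is assumed for 8K, the function names are hypothetical, and the shake offset is taken as already measured by some other means.

```python
UHD_8K = (7680, 4320)   # assumed 8K frame size (width, height)
FULL_HD = (1920, 1080)


def windows_per_axis(frame, window):
    """Return how many non-overlapping windows fit across and down the frame."""
    return frame[0] // window[0], frame[1] // window[1]


def stabilized_origin(frame, window, shake_x, shake_y):
    """Return the top-left corner of an output window that follows a shake.

    The window starts centered in the captured frame. (shake_x, shake_y) is
    how far the scene moved within the captured frame, in pixels. The window
    follows the scene and is clamped so it never leaves the captured frame.
    """
    max_x = frame[0] - window[0]
    max_y = frame[1] - window[1]
    x = max_x // 2 + shake_x
    y = max_y // 2 + shake_y
    return min(max(x, 0), max_x), min(max(y, 0), max_y)


if __name__ == "__main__":
    print(windows_per_axis(UHD_8K, FULL_HD))              # (4, 4)
    print(stabilized_origin(UHD_8K, FULL_HD, 100, -50))   # (2980, 1570)
```

Because the output window is a straight crop at full HD size, no scaling is involved and no HD resolution is lost until the shake exceeds the spare margin.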

Contrast ratio was just one of the topics in the presentation, “Computational Imaging,” of the second panelist, Peter Centen of Grass Valley. Above is an image he presented at the SMPTE summit. The only light source in the room is the lamp facing the camera lens, but every chip on the reflectance chart is clearly visible and so are the individual coils of the hot tungsten filament. It’s an extraordinarily high dynamic range (HDR); a contrast ratio of about ten million to one — more than 23 stops — was captured.
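
The relationship between the contrast ratio and the stop count quoted above is a base-2 logarithm, since each stop is a doubling of light. A minimal sketch (the function name is illustrative):

```python
import math


def contrast_ratio_to_stops(ratio):
    """Convert a contrast ratio (brightest / darkest) to photographic stops."""
    if ratio <= 0:
        raise ValueError("contrast ratio must be positive")
    return math.log2(ratio)


if __name__ == "__main__":
    # Ten million to one, the figure quoted for the HDR image.
    print(round(contrast_ratio_to_stops(10_000_000), 2))  # 23.25
```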

Yes, that was an image he presented at the SMPTE summit — five years ago in 2008. This year he showed a different version of an HDR image. There’s nothing wrong with the technology, but bringing it to the market is a different matter.

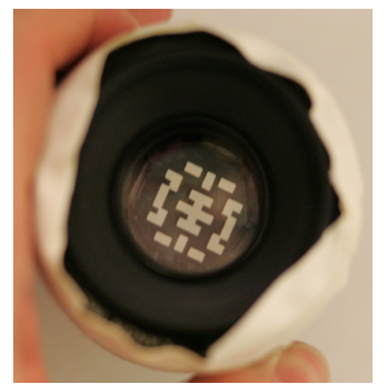

At the 2013 TSC, Centen showed an even older development, one first presented by an MIT-based group at SIGGRAPH in 2007 <http://groups.csail.mit.edu/graphics/CodedAperture>, a so-called “coded aperture.” Consider a point just in front of a camera’s lens. The lens might zoom in or out and might focus on something in the foreground or background. Its aperture might be wide open for shallow depth of field or partially closed for greater depth of field. If it’s a special form of lens (or lenses), it might even deliver stereoscopic 3D. All of those things might happen after the light enters the lens, but all of those possibilities exist in the “lightfield” in front of the lens.

There have been many attempts to capture the whole lightfield. Holography is one. Another, used in the Lytro still camera, uses a fly’s-eye type of lens, which can cut into resolution (an NAB demonstration a few years ago had to use an 8K camera for a low-resolution image). A third was described by the third panelist (and shown in his booth on the show floor). The one Centen showed requires only the introduction of a disk with a pattern of holes into the aperture of any lens on any camera.

Here is just one possible effect on fixing things in post, with images from the MIT paper. It is conceivable to change focus distance and depth of field and derive stereoscopic 3D from any single camera and lens combo after it has been shot.

The moderator’s introduction to the panel showed a problem with higher resolutions: getting lenses that are good enough. He showed an example of a 4K lens (with just a 3:1 zoom ratio) costing five times as much as the professional 4K camera it can be mounted on. Centen offered possibilities of correcting both lens and sensor problems in post and of deriving 4K (or even 6K) from today’s HD sensors.

The third panelist, Siegfried Foessel of the Fraunhofer Institute, seemed to cover some of the same ground as Centen (using computational imaging to derive higher resolution from lower-resolution image sensors, increasing dynamic range, and capturing a lightfield), but his versions used completely different technology. The higher resolution and HDR can come from masking the pixels of existing sensors. And the Fraunhofer lightfield capture uses an array of tiny cameras not much bigger than one ordinary camera, as shown in their booth (right). Two advantages of the multicamera approach are that each camera’s image looks perfect (with no fly’s-eye resolution losses or coded-aperture light losses) and that the wider range of lens positions also allows some “camera repositioning” in post (without relying on Project FINE processing).

Foessel also discussed higher frame rates (as did many others at the 2013 TSC, including a professor of neuroscience and an anesthesiologist). He noted that capturing at a high frame rate allows “easy generation of different presentation frame rates.” He also speculated that future motion-image programming might use a frame rate varying as appropriate.
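
One way to picture the "easy generation" point is simple frame decimation, sketched below. The 120 fps capture rate is an assumption borrowed from the NHK camera described earlier, the function names are hypothetical, and a real conversion would probably also blend frames to control motion blur rather than just dropping them.

```python
def decimation_factor(capture_fps, presentation_fps):
    """Return the integer frame-skip factor, or None if the rates do not divide evenly."""
    if capture_fps % presentation_fps == 0:
        return capture_fps // presentation_fps
    return None


def decimate(frames, factor):
    """Keep every `factor`-th frame, starting with the first."""
    return frames[::factor]


if __name__ == "__main__":
    capture_fps = 120  # assumed capture rate
    for target in (60, 30, 24, 25):
        print(target, decimation_factor(capture_fps, target))
    # 60 -> 2, 30 -> 4, 24 -> 5, 25 -> None (needs interpolation instead)

    one_second = list(range(capture_fps))      # stand-in for 120 captured frames
    print(len(decimate(one_second, 5)))        # 24
```

Rates that divide the capture rate evenly need nothing more than frame selection, which is what makes a high capture rate a convenient master format.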

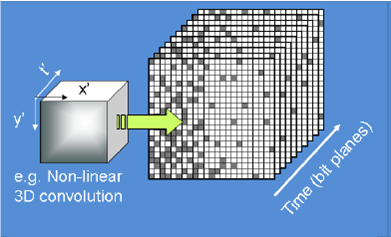

The last panelist was certainly not the least. He was Eric Fossum from Dartmouth’s Thayer School of Engineering, but he was introduced more simply, as the inventor of the modern CMOS sensor. His presentation was about a “quanta image sensor” (QIS) containing, instead of pixels, “jots.” The simplest description of a jot is as something like a photosensitive grain from film. A QIS sensor counts individual photons of light and knows their location and arrival time.

An 8K image sensor has more than 33 million pixels; a QIS might have 100 billion jots and might keep track of them a thousand times a second. The exposure curve seems very film-like. Fossum mentioned some other advantages, like motion compensation and “excellent low light performance,” although this is a “longer-term effort” and we “won’t see a camera for some time.”

The “convolution window size” (something like film grain size) can be changed after image acquisition. In other words, even the “film speed” will be able to be changed in post.
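
A toy model may help show why the window size can be chosen after capture. This is not Fossum's design: it assumes each jot reports a single bit per readout, replaces the convolution window with simple non-overlapping blocks, and uses made-up names and sizes throughout.

```python
import random


def make_jot_cube(width, height, fields, hit_probability, seed=0):
    """Simulate binary jot readouts: cube[t][y][x] is 1 if a photon was detected."""
    rng = random.Random(seed)
    return [[[1 if rng.random() < hit_probability else 0
              for _ in range(width)]
             for _ in range(height)]
            for _ in range(fields)]


def form_image(cube, window_xy, window_t):
    """Sum jots over non-overlapping window_xy x window_xy x window_t blocks.

    Only the first window_t readout fields are used. A larger window gathers
    more jots per output pixel but yields fewer output pixels.
    """
    if window_t > len(cube):
        raise ValueError("window_t exceeds the number of readout fields")
    height = len(cube[0])
    width = len(cube[0][0])
    image = []
    for y0 in range(0, height - window_xy + 1, window_xy):
        row = []
        for x0 in range(0, width - window_xy + 1, window_xy):
            row.append(sum(cube[t][y][x]
                           for t in range(window_t)
                           for y in range(y0, y0 + window_xy)
                           for x in range(x0, x0 + window_xy)))
        image.append(row)
    return image


if __name__ == "__main__":
    cube = make_jot_cube(width=8, height=8, fields=4, hit_probability=0.1)
    fine = form_image(cube, window_xy=2, window_t=4)     # 4 x 4 output pixels
    coarse = form_image(cube, window_xy=4, window_t=4)   # 2 x 2 output pixels
    print(len(fine), len(coarse))
```

The same stored jot data produces both images, so the trade between sensitivity and resolution is made after acquisition, which is the sense in which "film speed" becomes a post decision.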