NBA Virtual-Reality Broadcasts on Oculus Fueled By Fully Cloud-based Decentralized Workflow

Through The Switch and AWS, MediaMonks takes live VR sports production into the cloud

In a challenging season of evolving COVID-based protocols, limited fan attendance, and national broadcast partners’ taking much of their live game production remote, the NBA has managed to uphold its reputation as one of the nation’s most progressive professional sports leagues.

That includes leading the way on NFTs (through NBA Top Shot), sports-betting–based alternative streaming broadcasts, and, yes, even virtual reality.

The NBA has partnered with Facebook to deliver a package of live games in virtual reality on the Oculus platform.

Through its partnership with Facebook, the NBA has delivered a package of 10 VR broadcasts of live games on the Oculus Quest platform, the last of which — between the Toronto Raptors and Dallas Mavericks — will stream Friday evening.

Consumable on Oculus Quest’s Venues app, this season’s edition of the NBA’s live VR experience brings users (and their dynamic avatars) into a suite-like experience to interact with other fans of their choosing while watching a produced, multi-camera live presentation of the game itself.

Yet, although the consumer side of the production has been advanced significantly by pushing the quality of the video to 4K at 60 fps, perhaps the most radical and impactful changes are behind the scenes, where the entire live experience is being produced via a new decentralized model with the crew working from numerous locations around the globe.

The Key First Mile to the Cloud

The NBA and Facebook have entrusted international creative digital-production company MediaMonks to produce the live game coverage and assist with marrying it to the Venues app experience within Oculus.

Camera specialist Alan Bucaria sets up one of four cameras used to shoot the VR broadcast. Bucaria is one of just two to three people required at the arena to make the production possible. (Photo Courtesy: MediaMonks)

MediaMonks’ team has logged plenty of reps working NBA VR experiences in the past, but, when it became clear that conditions at NBA arenas were going to be quite different this season given careful resumption of play amid the coronavirus pandemic, it was understood that this slate of productions would be the most challenging yet.

Unable to travel staff and have a large technical presence onsite, MediaMonks had to get creative. Like many broadcasters throughout this pandemic, it would need to find a way to produce from home — literally. The agency would assemble a completely decentralized workflow that would allow the entire production to be done in the cloud.

The key to decentralization was unlocking the connectivity. MediaMonks turned to The Switch for its high-capacity, low-latency first-mile connectivity into every NBA arena. This enabled The Switch to extend a direct peering with AWS via a private Direct Connect, which MediaMonks accessed directly from the NBA Venue panel. The ability to funnel 4K video signals over The Switch’s network, out of the arena and into the cloud, was the critical element that enabled the entire show.

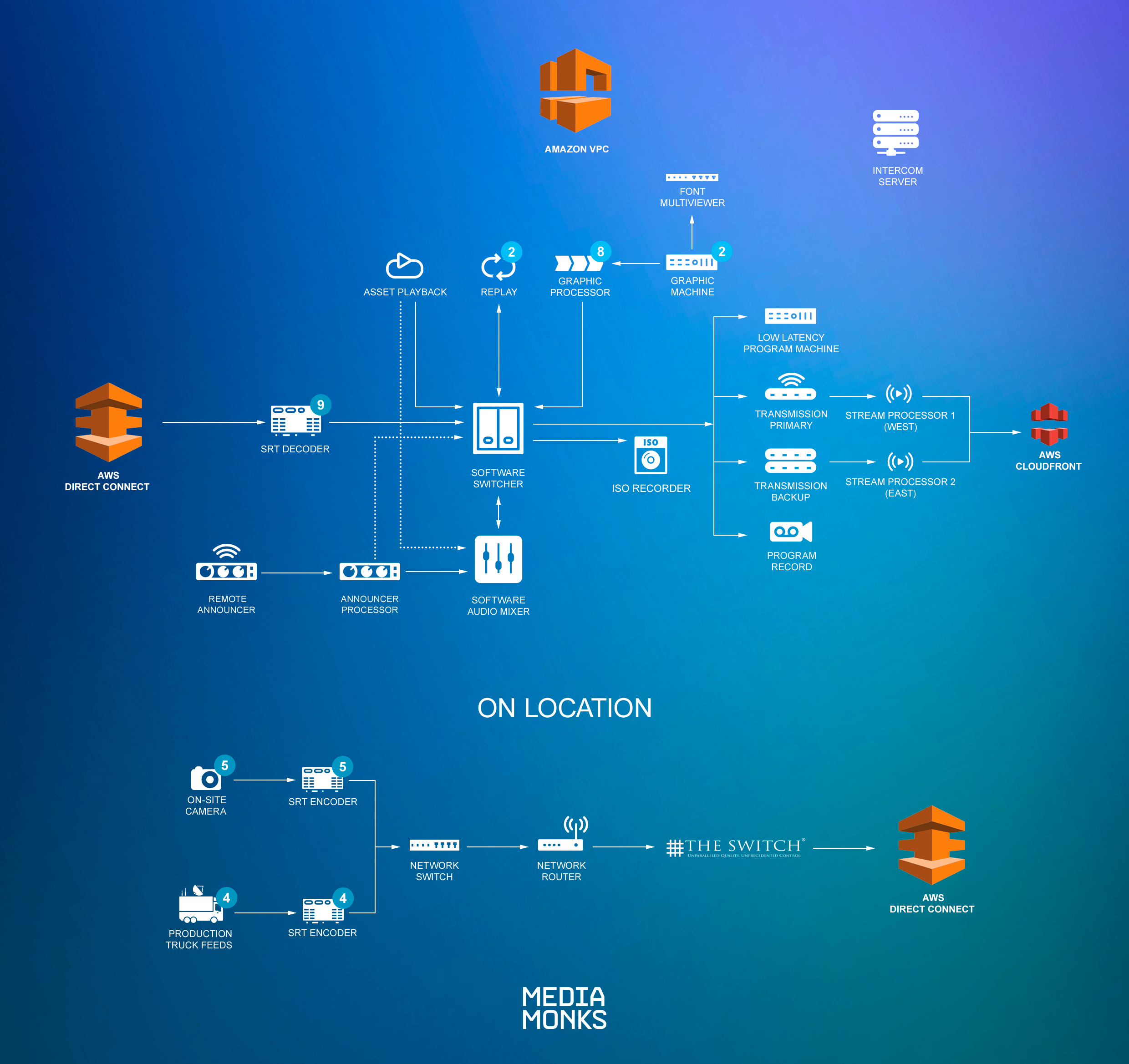

The signal workflow is relatively straightforward (see image above). From AWS Direct Connect, the camera signals are fed through nine software SRT decoders into TouchDesigner for 3D mapping and custom projection. From there, NewBlue’s Titler Live adds graphics, and the output is brought into Vizrt’s Viz Vectar Plus production-switching system (controlled by Central Control), where the crew can tap in and work from their respective homes to build the final product, which is transmitted to Oculus headsets through Amazon CloudFront.
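For readers who prefer to see the chain laid out as data, the workflow described above can be sketched as an ordered list of stages. This is only an illustrative model of the order given in this article, not MediaMonks’ actual tooling; the names `Stage`, `SIGNAL_CHAIN`, `describe`, and `downstream_of` are hypothetical, and the role descriptions are paraphrased from the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    """One hop in the signal chain (hypothetical model, not a vendor API)."""
    name: str
    role: str


# Order follows the article's description; roles are paraphrased from the text.
SIGNAL_CHAIN = [
    Stage("Haivision Makito X4 encoders", "encode camera feeds at the arena"),
    Stage("The Switch network", "first-mile transport out of the arena"),
    Stage("AWS Direct Connect", "private link into the cloud"),
    Stage("Software SRT decoders", "decode the incoming camera streams"),
    Stage("TouchDesigner", "3D mapping and custom projection"),
    Stage("NewBlue Titler Live", "graphics"),
    Stage("Viz Vectar Plus", "production switching by the remote crew"),
    Stage("Amazon CloudFront", "delivery to Oculus headsets"),
]


def describe(chain):
    """Return the chain as a single arrow-separated string."""
    return " -> ".join(stage.name for stage in chain)


def downstream_of(chain, name):
    """Return the names of every stage that follows the named stage."""
    names = [stage.name for stage in chain]
    return names[names.index(name) + 1:]


if __name__ == "__main__":
    print(describe(SIGNAL_CHAIN))
    # Stages that depend on TouchDesigner's output
    print(downstream_of(SIGNAL_CHAIN, "TouchDesigner"))
```

Laying the chain out this way makes it easy to see that only the first stage depends on hardware at the arena; everything from AWS Direct Connect onward runs in the cloud.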

“This workflow sounds terrifying and bizarre,” says Lewis Smithingham, MediaMonks’ Director of Creative Solutions, “but, once it got up and running, it has been one of the more stable shows that we’ve ever run.”

Minimal Onsite Presence

At the arena on a game night, the MediaMonks team has to deploy little more than five cameras and a small 12-rack-unit rolling case housing Haivision Makito X4 encoders.

The only gear required onsite for the production is five Sony cameras and a 12 RU rolling case of Haivision Makito X4 encoders. Those encoders leverage The Switch’s in-venue network to transport the camera feeds to AWS Direct Connect. (Photo Courtesy: MediaMonks)

With the team looking to stick with more-traditional broadcast elements, these games are not produced with a special VR rig of the kind that previous major VR projects across the industry may have used. Instead, these games are shot using Sony FX6 full-frame cinema cameras outfitted with Canon EF 8-15mm fisheye lenses. They are positioned in four spots in the arena: high center court, low center court, and under each basket.

Only two to three crew members are needed onsite: VR Broadcast Engineer in Charge Ken Stiver and Camera Lead/Live Tech Alan Bucaria, supported by Broadcast Engineer Adam Roth, or a local utility. Everyone else involved in the show works on equipment in their own homes.

Says Smithingham, “It’s liberating as a manager to be able to recruit the talent that I want for this show, [rather] than be restricted to just who can get to the truck.”

Bringing in Talent Far and Wide

The show is steered by a crew more than 15 people deep, all working from home in locales ranging from Orlando to New York, New Jersey, Los Angeles, San Francisco, Arkansas, London, Nottingham, Buenos Aires, and São Paulo.

Among those behind the scenes were Solutions Architect Lead Jason O’Malley, Broadcast and Films Senior Technical Manager Patrick Jones, Operations Director Rob McNeil, Consulting Engineer Devin Block, Event Producer, Project Manager Igor Evangelista, and Event Producer and Stage Manager Tim Walker, working in collaboration with The Switch’s Sales Director Meredith Wolcott and Director of Network Engineering Joseph Zarakas. The project was overseen by MediaMonks’ EVP/Global Head of Entertainment Eric W. Shamlin.

Show director Jamie LoFiego called the shots from this setup in his Orlando home. (Photo Courtesy: MediaMonks)

Calling the shots and cutting between the cameras during the game was Director/Show Producer Jamie LoFiego, working on a multi-monitor setup at his home in Orlando. He comes from a traditional sports background, directing videoboard shows at the Tampa Bay Buccaneers’ Raymond James Stadium and the Orlando Magic’s Amway Center. He is working alongside Executive Producers Kevin Collinsworth and Paul Gallardo, Line Producer Andrew Orr, Assistant Director/Producer Myla Unique Minor, Technical Director Matt Johnson, TouchDesigner Producer Craig Pickard, Graphics Producer Andrew Kehrer, Graphics Operator/Producers DC Robbins and Mackenzie Bohall, Replay Operator Jamie Russell, A1 Wes Hovanec, IT Lead James Ce, and many others.

The experience even has exclusive broadcasters in play-by-play commentator Adam Amin and analyst Richard Jefferson, who are actually working out of The Switch’s Los Angeles-based production facility.

“If you had told me [we’d be doing this] a year ago, there would have been no way,” says Smithingham, noting that he finds it difficult to picture a world where he could get the budget and/or space in the venue for a full-fledged truck production to support a project of this nature now that this workflow has been proven. “This is such a different way to work, and, honestly, I don’t see myself going back.”

The schedule of NBA League Pass games on Venues on the Oculus Quest Platform concludes Friday with the Toronto Raptors at the Dallas Mavericks beginning at 9 p.m. ET.