ESPN’s Rose Parade Production Is First To Use Unreal Engine as End-to-End Live-Broadcast-Graphics Tool

Epic Games’ ‘Project Avalanche’ tool is part of the new Unreal Engine 5 game-rendering engine

Over the past half decade, Epic Games’ Unreal Engine game-rendering technology has brought an entirely new level of realism to live sports broadcasts — primarily through the use of real-time AR and virtual graphics. The technology took a major leap forward during the Rose Parade production on ABC on Monday: ESPN became the first to deploy Unreal Engine as an end-to-end live-broadcast-graphics tool. ESPN used the new “Project Avalanche” tool in Unreal Engine 5 to produce the entire graphics package from design to on-air playout.

“This is the beginning of a much larger journey with [Epic Games],” said Michael “Spike” Szykowny, senior director, animation, Graphics Innovation & Production Design, ESPN Creative Studios, prior to the parade. “The goal of that journey is ‘How can we take the amazingly cool stuff from the gaming world and bring it into the broadcast world?’ This production will use Unreal from end to end. You don’t get these types of ‘first’ opportunities very often, so it’s very exciting for us to be in on the ground floor. And I think the potential is endless.”

The broadcast served as a test of sorts for Unreal Engine’s Project Avalanche tool and marks the beginning of what both ESPN and Epic Games call a long-term collaboration.

“This is a new tool added to Unreal that we worked together with ESPN to vet out,” said BK Johannessen, business director, Unreal Engine, broadcast and live events, Epic Games. “It is very unlike what you typically have seen Unreal used for in the past. This has nothing to do with virtual or AR or tracking. Instead, we’re using Unreal for traditional overlay graphics but with the robust power of the Unreal Engine underneath. It offers a lot of capabilities that you don’t typically find in systems that do these types of overlays, which can make the process easier from both a design and an execution point of view.”

Bringing Real-Time Rendering to the Broadcast-Graphics World

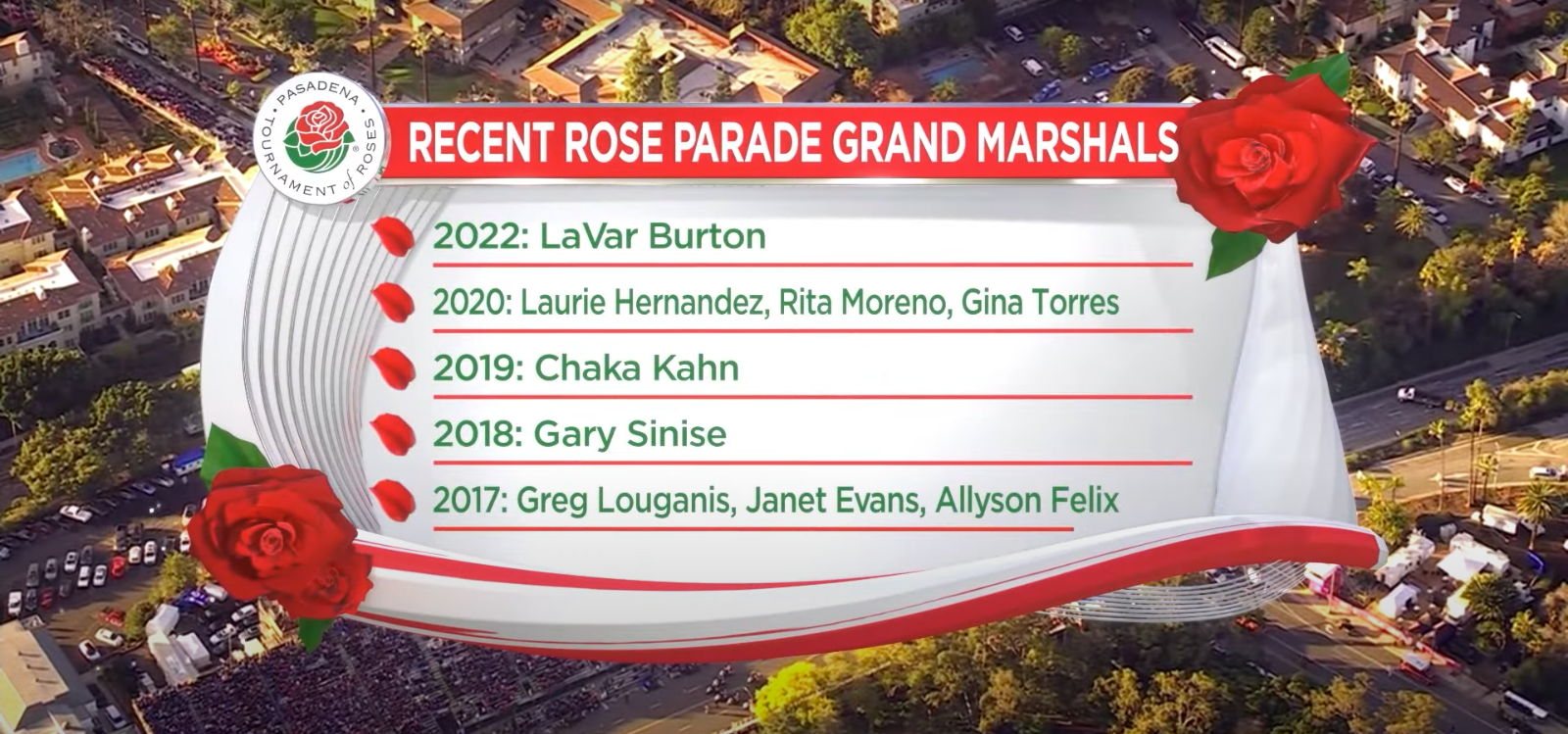

While Unreal Engine has become synonymous with AR and virtual elements within the broadcast community, it had yet to be deployed as an all-encompassing graphics-production tool for live broadcast. That changed with Monday’s Rose Parade when both the animation package (including the show open) and insert package were built entirely in Unreal.

“Most people are using Unreal for the big-spectacle–event things, but they’re not using Unreal for the more traditional day-to-day type of insert graphics,” said Andy Blondin, director, technical product management, Epic Games. “Essentially, Project Avalanche allows a broadcast designer familiar with [established broadcast] tools to use the power of Unreal Engine in the same manner that they would use to run graphics for a typical show. That has been one of the challenges for us at Epic: taking something that was built for the purpose of gaming and bringing it into broadcast. We’re finally at the point with this tool where you have all the broadcast workflows you need but with all the power of Unreal.”

According to Blondin, Unreal now offers all the tools needed for each stage of the graphics-production pipeline, including design, rigging, templating, key and fill, and more. The difference is that, with Unreal, these 2D and 3D graphics can be rendered in real time and at higher fidelity than traditional graphics systems allow.

“From a technical perspective, a lot of pre-rendered video clips end up getting played back through these [traditional broadcast] systems,” said Blondin. “There’s not a lot of flexibility to modify anything on the fly; you get locked in pretty early. But, for this show, we’re doing full 3D geometry of everything that’s animated. There are no video clips being played out in the broadcast. We have the roses blooming and full animations playing out live. We can art-direct it and be able to change all the lighting and particles on the fly.”

Dana Drezek, supervisor, motion graphics, ESPN, and Jason Go, senior real-time motion graphics designer, ESPN, oversaw the project in collaboration with Epic Games, from the initial design to playout in the truck on Monday. The operator interface in the truck featured all the standard elements of a traditional graphics-playout system, including a playlist tool, a show rundown, preview and program sources, key and fill monitoring, and a tool for typing text into graphics.

“We want to shorten the learning gap and make it as easy [as possible] for someone who knows those typical tools to come over and use Unreal,” said Blondin.

Unreal Makes the Rose Parade Bloom Like Never Before

The ESPN-Epic Games partnership came about during the 2022 SVG Sports Graphics Forum, Szykowny said, when he approached Blondin after seeing his presentation on Unreal Engine’s evolving role in the broadcast ecosystem.

“Andy and I had never met before, so I introduced myself and said we should be working together and I want to be the first one to do this because it’s very intriguing,” Szykowny said. “To see this come from me hounding down Andy backstage at an SVG [event] to getting it on-air in just a few months has been pretty amazing to watch.”

ESPN selected the Rose Parade as the inaugural “all-Unreal” broadcast because it is light on real-time graphics that require data feeds and player/team statistics. The data-light production not only provided an ideal testing ground but also offered a broadcast filled with beauty and pageantry that Unreal Engine could bring to life for viewers in a new way.

“While the beauty of the tool is phenomenal,” said Szykowny, “the technical end is just as important. We need to be sure that, once we get in a live truck, the director and producer are going to have everything they need and there aren’t any hiccups. So we put [Unreal] through the paces to make sure that everything works in the way that it needs to so that there is zero sacrifice on the production end.”

ESPN and Epic worked together to create an entirely new identity package for the Rose Parade, including both insert graphics and animations — all using Unreal Engine. Szykowny said Unreal enabled “a more photorealistic look inspired by the beautiful roses and the pageantry around Pasadena. That was a huge influence on the design.”

Looking Ahead: Unreal Not Looking To Be ‘the Holy Grail of Broadcast-Graphics Tools’

Epic Games will now turn to what’s next for Project Avalanche, the development code name for the platform, which has yet to be formally branded. Although the Rose Parade production was driven by Unreal Engine from end to end, Johannessen emphasized that Project Avalanche’s robust API will allow traditional broadcast-graphics vendors to integrate it into their own systems.

“We are engaged with several of the more traditional broadcast vendors to have it integrated into their playout and operator tools,” he explained. “We don’t envision that we are going to be the holy grail of broadcast-graphics tools. But it is a fantastic suite of tools that allow a designer to test everything end to end, including the playout part of it, which has sometimes been cumbersome in the past. We are definitely working with third parties in terms of supplying their own tools as well as more custom-type solutions.”

As for ESPN’s next steps in its Unreal evolution, Szykowny said he could foresee its being used for a variety of sports broadcasts in the future but, “quite honestly, all of our focus is on making sure this first show goes well. After that, we’ll sit down and have a post-mortem talk about things and then figure out like next steps and where it goes from there. One thing I know for sure: tools like this will just help us get better.”

CLICK HERE for more on ESPN’s Rose Parade production this year.