ESPN, DMED Pull Off First End-to-End Cloud-Based Live-Game Production With A-10 College Hoops Game

The Davidson–George Mason game is believed to be the first live, end-to-end cloud production of a U.S. sporting event to be broadcast on linear TV

ESPN and Disney Media & Entertainment Distribution (DMED) continued to push the boundaries of cloud-based production on Jan. 14, when they teamed up on what is believed to be the first fully cloud-based live-game production of a U.S. sporting event to air on linear TV: the Davidson–George Mason Atlantic 10 Conference basketball game in Fairfax, VA.

Feeds from onsite cameras and microphones were sent directly into an AWS cloud instance, where software-based production tools were running virtually. Meanwhile, operators in a control room at ESPN’s Bristol, CT, campus used control surfaces to produce the show remotely for ESPN3. The software-based approach not only reduced the need for costly hardware and physical infrastructure but also eliminated the transmission bottlenecks associated with hardware-based REMI (remote-integration model) productions.

Operators on hand in Bristol: (from left) Director 1 Ashley Ward, Senior Associate Producer Greg Burud, Media Replay Operator 1 Maddie Boccardi

“We see this as a major step forward in our evolution as it relates to cloud-based production,” says Chris Strong, director, specialist operations, Disney Media & Entertainment. “The beauty of cloud is, we can take the REMI model or any type of traditional model and be able to make it a far more scalable solution. In a traditional fixed facility, you build a set amount of rooms that is the total rooms you have available. If you want to scale that up, you’re going to have to build more brick-and-mortar facilities. But, with the cloud, you can take all those resources and send them to a cloud instance, which allows you to scale almost infinitely.”

Building the On-Ramp to Distributed Production in the Cloud

The proof of concept comes on the heels of ESPN/DMED’s recent successful deployment of a cloud-based super-slo-mo replay workflow for REMI productions and marks a major step forward in their effort to create a sustainable cloud-based live-production model. Although the production crew was located in Bristol for this initial production, ESPN believes the cloud-based model will eventually give its production workforce the flexibility to work from anywhere.

“The next generation of this [model] will move to a distributed workflow where our operators can work from home,” says Strong. “The goal is to build an efficient, scalable, and sustainable model so that, when our [ops team] has two college-basketball shows to cover at once, we could spin up a [cloud] instance instead of using resources in one of our fixed facilities. We also would be handing a single program output to the facility versus trying to accommodate eight to 10 more individual resources like in a REMI or REMCO [model].”

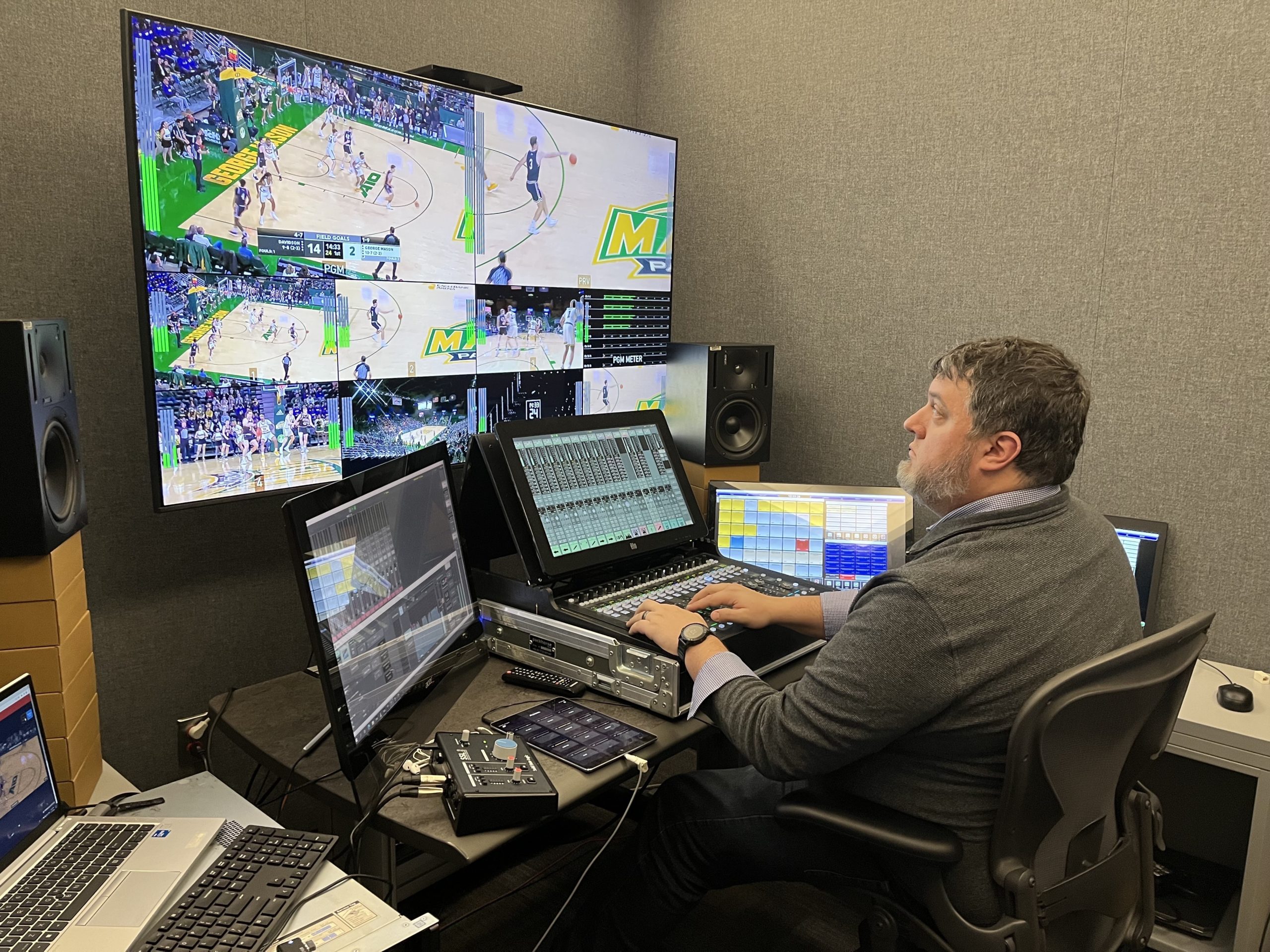

Senior Audio Operator Cody Hardin in Bristol uses an SSL audio-mixing control surface for the cloud-based production.

Live Cloud Production From Beginning to End

During the production, ESPN successfully tested video switching, replay, graphics, multiviewer generation, audio mixing, comms, file transfer, and transmission QC in the cloud.

“All the typical things that we would normally have within the facility to produce the show were running in the cloud,” says Daniel Lannon, manager, REMI operations, DMED. “Then we had the director/TD, replay operator, producer, audio operator, comms specialist, and our graphics operators and graphics producers all located within the facility. They were all controlling the [technology] that was running in the cloud data center from [control surfaces] in Bristol.”

Graphics Interface Coordinator Keith Rall

The cloud-based operation was built around a ViBox all-in-one production video switcher and slo-mo replay system from Simplylive (now owned by Riedel Communications). The team also used a Simplylive Venue Gateway onsite encoder/decoder and multiviewer to IP-encode all the camera and audio feeds and transport them to an AWS cloud instance using SRT (Secure Reliable Transport).

All the necessary tools for the live production were running in the cloud, including Vizrt’s Viz Trio, Viz Engine, and Clock & Score Control Interface for graphics; Scoreboard OCR (optical character recognition) for clock-and-score data extraction; Solid State Logic audio mixing (with a 16-fader console surface in Bristol); Unity Connect audio comms; Audinate Dante Domain Manager; ENCO DAD audio-asset management and playout; Avsono audio NDI gateway and processing; Sienna ND NDI routing, processing, and multiviewer generation; and Signiant high-speed file transfer.

“Quality and the expectations from the production team were key,” says Strong. “We did not give up any level of production that would have been done in the hardware brick-and-mortar facility by putting this in the cloud. It has exactly the same quality with the same level of resources as the REMI ViBox model would have been. Our production was afforded everything they’re used to in our normal REMI model: switching, audio, graphics, video, camera isos, replay, scorebug, comms, and even talent onsite.”

Added Challenges: Announcers Onsite and Preparing a Backup Plan

With ESPN’s announcers calling the action from EagleBank Arena, the signal path had to run in both directions: all camera and audio feeds went up into the cloud, the show was cut in Bristol, and the mix-minus audio, program feed, and tallies were returned to the arena for the announcers via the Simplylive Venue Gateway and Unity Connect.

As a redundant backup plan, ESPN also took four isolated camera feeds directly into a separate AWS cloud instance equipped with small-scale switching and audio-mixing capabilities. If the primary cloud-based production chain had failed, ESPN could have subswitched the live broadcast from that cloud instance.

“In terms of operator experience,” says Lannon, “it was extremely reliable due to our connectivity between Bristol and the data center [in Virginia] and the fact that we are leveraging SRT for video transport, which beats satellite in terms of [latency]. The operators, director, and producer all walked away saying it felt just like they were operating while sitting in the mobile unit onsite. There was no reduction in functionality or experience.”

Media Replay Operator 1 Maddie Boccardi

On the Horizon: ‘Truly Sustainable, Supportable, Scalable Workflow Long Term’

Lannon expects the cloud-based production model to bring new transmission efficiencies to ESPN’s fixed facilities.

“This is also going to create efficiencies when we have multiple games within a single event or series or multiple presentations within a single production, which we are seeing more often,” he explains. “In this model, we deliver only a single program feed to Bristol transmission instead of multiple feeds [as] in a REMI model. Beyond the fact that you are saving a production-control room or a mobile unit, you are also cutting down on infrastructure to handle all those feeds coming in. That is going to allow us to scale up and scale down as we face these busy production windows and concurrency of different sports.”

Although Strong and Lannon say that further refinements will be necessary to make the cloud-based production model more scalable and repeatable, they agree that it is ready to move forward in the development process.

“I think we’re ready to take the next step, which will involve working with all the different departments and partners across the entire [DMED ecosystem] to check all the boxes from end to end,” Strong says. “That includes network security, production, engineering, operations, and everything in between. We want to take it from proof of concept to build a truly sustainable, supportable, scalable workflow long term.”

CORRECTION: A previous version of this article stated that this was the first end-to-end cloud-based live production, which was not accurate. While other pure-cloud productions have been completed in the U.S. (including the NBA’s VR broadcasts on Oculus), the Davidson–George Mason game is believed to be the first end-to-end cloud production of a live U.S. sporting event to be broadcast on linear television.