SVG Tech Insight: Cloud-based Remote Editing for Sports Organizations

This fall SVG will be presenting a series of White Papers covering the latest advancements and trends in sports-production technology. The full series of SVG’s Tech Insight White Papers can be found in the SVG Fall SportsTech Journal.

Traditionally, there have been two approaches to remote editing. The first is “proxy” or “low-res” editing, in which editors use specialized editing clients that work with lower-resolution media and therefore need less bandwidth. After the edit, projects are either sent to “craft” editing clients, such as Adobe Premiere Pro, and relinked to the high-resolution material, or a new clip is created, usually by a server-side render engine working from the high-res material. This approach has many merits, especially for journalistic or highlight editing, which requires only simple edits and/or voice-over. But it is less suitable for other workflows relevant to the sports market: it offers fairly limited editing functions at lower quality, which makes it hard to evaluate sharpness or depth of field. Clients specialized for proxy editing also lack the look, feel, and functions of craft editing clients.
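Relinking works because a proxy keeps the timing of its source, so in and out points set on the proxy map frame for frame onto the high-res file. The Python sketch below illustrates only that idea, under stated assumptions: `EditEvent`, `conform`, and the example paths are hypothetical names, and real craft editors relink through their own project formats.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EditEvent:
    """One cut in a proxy edit (hypothetical structure)."""
    asset_id: str  # MAM identifier shared by proxy and high-res file
    src_in: int    # first source frame used (inclusive)
    src_out: int   # frame after the last one used (exclusive)


def conform(events, hires_paths, proxy_fps, hires_fps):
    """Map proxy edit events onto high-res files, frame for frame."""
    if proxy_fps != hires_fps:
        # Different frame rates would mean proxy frame numbers no longer line up.
        raise ValueError("proxy and high-res frame rates differ")
    return [(hires_paths[e.asset_id], e.src_in, e.src_out) for e in events]


if __name__ == "__main__":
    events = [EditEvent("a1", 100, 250)]
    print(conform(events, {"a1": "/hires/a1.mxf"}, 25, 25))
```

The frame-rate check stands in for the broader requirement that proxy and source share identical timing; without it, edit points chosen on the proxy would land on the wrong high-res frames.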

A second approach, made practical as high-bandwidth connections have become more widely available, is to connect a craft editing client directly to the high-res storage over a Virtual Private Network (VPN). However, even with high-speed broadband connections, this approach does not give users a local-like experience, because the file-sharing protocols involved, such as Server Message Block (SMB), Apple Filing Protocol (AFP), and Network File System (NFS), were not designed to operate over high-latency networks. Over such long-distance links, latency and packet loss dramatically degrade their performance.
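Why latency matters so much can be illustrated with the bandwidth-delay product. As a simplified model, assume a protocol that keeps at most a window of W bytes in flight before waiting for an acknowledgement; its throughput is then capped at W divided by the round-trip time, regardless of link speed. With a hypothetical W of 1 MiB, compare a 5 ms round trip with a 50 ms one:

```latex
\text{throughput}_{\max} \approx \frac{W}{\mathrm{RTT}},
\qquad
\frac{8 \times 1\,048\,576\ \text{bit}}{0.005\ \text{s}} \approx 1.68\ \text{Gbit/s},
\qquad
\frac{8 \times 1\,048\,576\ \text{bit}}{0.050\ \text{s}} \approx 168\ \text{Mbit/s}
```

On a LAN the link itself is usually the bottleneck, while over a long VPN path the protocol's own round trips become the limit. The model ignores packet loss, which reduces throughput further.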

Even audio is costly: the client spends valuable time analyzing it to generate waveforms, which often leads users to disable useful functionality just to make editing practical.
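Waveform display is expensive because it has to touch every sample: one hour of 48 kHz stereo audio is 48,000 × 2 × 3,600 = 345.6 million samples, and a clip with several audio tracks multiplies that. The sketch below shows a common min/max peak reduction in Python; it is an illustration of the general technique, not how any particular editor implements it, and `waveform_peaks` is a hypothetical name.

```python
def waveform_peaks(samples, bucket_count):
    """Reduce PCM samples to one (min, max) pair per display bucket.

    Every sample is visited once, which is why waveform generation has to
    read (and, over a VPN, transfer) the complete audio track first.
    """
    if bucket_count <= 0:
        raise ValueError("bucket_count must be positive")
    n = len(samples)
    peaks = []
    for b in range(bucket_count):
        # Integer bounds spread any remainder evenly across buckets.
        bucket = samples[b * n // bucket_count:(b + 1) * n // bucket_count]
        peaks.append((min(bucket), max(bucket)) if bucket else (0, 0))
    return peaks
```

Editors typically cache such peak data so the full pass happens only once per file.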

An alternative is cloud-based editing. Several approaches have been taken here, including proxy streaming and cloud editing with live encoding, but both involve trade-offs that weigh heavily against the advantages they offer.

The considerations that have to be dealt with are as follows:

- Bandwidth: Since bandwidth is limited, production formats cannot be used directly for editing. A proxy format is the obvious answer, but its quality needs to be as close to the original as possible.

- Scalability: A cloud solution provides the possibility to scale streaming servers based on the number of connected clients. Moreover, a public cloud solution hosted on the major platforms like Microsoft Azure or Amazon Web Services allows users to create streaming instances close to the clients’ location, resulting in lower latency.

- Training: Integrating a streaming solution into a commonly used editing application like Adobe Premiere Pro lets editors work with well-known software and does not require them to adjust to new clients or workflows.

- Security: Large cloud providers offer solutions to protect data and invest heavily in securing connections. Encryption, such as that used in Transport Layer Security (TLS), the successor to Secure Sockets Layer (SSL), prevents third parties from reading or modifying information in transit. Encryption needs to be applied to secure sensitive information and copyright-protected content.
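The scalability consideration can be made concrete with a small sizing sketch. It assumes a hypothetical fixed capacity of clients per streaming instance and a measured round-trip time from a group of clients to each candidate region; `Region`, `instances_needed`, `closest_region`, and the region labels are illustrative names, not a cloud provider API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Region:
    name: str      # hypothetical region label, e.g. "eu-west"
    rtt_ms: float  # measured round-trip time from the client group


def instances_needed(clients: int, clients_per_instance: int) -> int:
    """Streaming instances required, assuming a fixed per-instance capacity."""
    if clients < 0 or clients_per_instance <= 0:
        raise ValueError("invalid client count or capacity")
    return -(-clients // clients_per_instance)  # integer ceiling division


def closest_region(regions):
    """Pick the candidate region with the lowest measured round-trip time."""
    return min(regions, key=lambda r: r.rtt_ms)


if __name__ == "__main__":
    regions = [Region("us-east", 85.0), Region("eu-west", 12.5)]
    print(closest_region(regions).name, instances_needed(25, 10))
```

Choosing by measured round-trip time rather than by geography alone accounts for network routing, which does not always follow physical distance.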

Given these considerations, cloud editing based on a Media or Production Asset Management system (MAM/PAM) is an increasingly good fit for many remote editing use cases in sports. It uses streaming servers and compressed video for playback in the editing client, and on top of that adds the resources of a MAM system, providing components such as pre-generated proxy video, metadata enrichment, and management of editing projects.

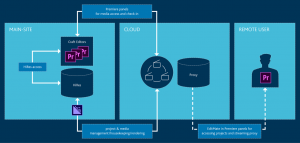

The figure above shows an example of a cloud remote-editing workflow built on top of a MAM: a hybrid craft-editing installation extended with remote editing.

The main site contains a “classic” on-premise craft-editing setup. Media assets are stored centrally on high-resolution (HiRes) storage and accessed by local Adobe Premiere Pro installations. The craft editor imports the video assets into a bin, edits a sequence, and renders it via the local Adobe Media Encoder to create a new asset. All the benefits (and limitations) of an on-premise solution remain.

On top of this, a cloud solution extends editing to remote locations. A project and media management solution hosted in the cloud enables both local and remote editors to search and browse centrally managed assets, which can be stored either in the cloud or on on-premise storage. The proxy, created from the HiRes source files, is also located in the cloud, as is the streaming server, which reads the proxy and streams it to connected remote clients for editing, review, and similar tasks.
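The division of labor in this architecture can be summarized as a routing rule: the streaming server always serves the cloud proxy, while rendering and on-site craft editing use the high-res file wherever it is stored. The sketch below encodes that rule in a hypothetical MAM record; `ManagedAsset`, `source_for`, the consumer names, and the URIs are illustrative assumptions, not part of any specific MAM product.

```python
from dataclasses import dataclass
from enum import Enum


class Location(Enum):
    ON_PREMISE = "on-premise"
    CLOUD = "cloud"


@dataclass(frozen=True)
class ManagedAsset:
    """A MAM record linking one asset to its high-res file and its cloud proxy."""
    asset_id: str
    hires_uri: str
    hires_location: Location  # high-res may live on-premise or in the cloud
    proxy_uri: str            # the proxy always lives in the cloud here

    def source_for(self, consumer: str) -> str:
        """Return the file a given consumer should read."""
        if consumer == "stream":  # streaming server -> remote editors
            return self.proxy_uri
        if consumer in ("render", "local-edit"):  # renderer, on-site craft edit
            return self.hires_uri
        raise ValueError(f"unknown consumer: {consumer}")
```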

The architecture may vary with the scale of the system; a solution that also hosts the HiRes storage and the renderer in the cloud is viable.

One important factor is that the choice of proxy format significantly influences the perceived editing experience, since resolution and compression determine how well editors can judge quality and sharpness.

A proven proxy format standard that is useful here is SMPTE RDD25. It is an AVC “Long GOP” proxy with AAC audio, originally conceived to standardize low-resolution proxies for use with low-res editors, and has since seen extensions that improve resolution, bandwidth, and audio capacity. Encoding from the HiRes source with the Main instead of the Baseline profile yields a proxy that can still be encoded faster than real time (up to 70 fps, depending on the source file). The resulting 1920×1080 H.264 video at 6–10 Mbit/s, with eight stereo audio tracks, is close to the source video while meeting the requirements of limited bandwidth. The proxy can be created in an MP4 container, which broadens interoperability, or in a Material Exchange Format (MXF) container, which allows editing while the proxy is still being generated.
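The figures above translate directly into storage and turnaround estimates. The Python sketch below does the arithmetic; the 128 kbit/s per stereo AAC track is an assumed value for illustration, not part of the source, and the function names are hypothetical.

```python
def proxy_size_gb(video_mbps, audio_kbps_per_track, audio_tracks, duration_s):
    """Approximate proxy file size in gigabytes (10**9 bytes), ignoring container overhead."""
    bits_per_second = video_mbps * 1e6 + audio_kbps_per_track * 1e3 * audio_tracks
    return bits_per_second * duration_s / 8 / 1e9


def encode_time_s(duration_s, source_fps, encoder_fps):
    """Wall-clock encode time when the encoder processes encoder_fps frames per second."""
    return duration_s * source_fps / encoder_fps


if __name__ == "__main__":
    # 90-minute match, 10 Mbit/s video, eight stereo tracks at an assumed 128 kbit/s each.
    print(round(proxy_size_gb(10, 128, 8, 90 * 60), 2), "GB")
    # 25 fps source encoded at 70 fps.
    print(round(encode_time_s(90 * 60, 25, 70) / 60, 1), "min")
```

Under these assumptions a 90-minute match yields a proxy of roughly 7.4 GB, and a 25 fps source encoded at 70 fps finishes in about 32 minutes, i.e. 2.8 times faster than real time.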

Ongoing codec developments mean that the H.264 component of this workflow can be optimized further. Moreover, the proxy quality is high enough for edited material to be distributed directly to different platforms, especially social media.

The streaming protocol is another fundamental element of cloud editing. The perceived editing experience stands or falls with playback performance and responsiveness, which depend mainly on streaming performance and are vital for tasks such as clip generation. Adobe Premiere Pro allows the integration of custom, proprietary importer plugins that handle playback of the content.

At one extreme, a video may be divided into many files (chunks or segments), each containing only a few seconds of video; at the other, it may be stored as a single unchunked file. With larger chunks, a request for a single frame can cause two complete chunks to be transported and decoded, because the frame belongs to a Group of Pictures (GOP) that spans both chunks. We have developed a proprietary protocol that runs over a TCP connection, and the Premiere importer functionality has been adjusted to transport only the individual frames that are actually required, as the client application requests them. This allows fast scrubbing as well as fast forward and playback.
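The chunk problem can be quantified with a small model. The Python sketch below is not the authors' importer; it assumes I/P-only GOPs in which decode order equals display order (B-frames would pull in additional reference frames), and `frames_needed` and `chunks_touched` are hypothetical names. Decoding a frame requires everything from the preceding I-frame up to that frame, and a chunk-based transport must fetch every chunk that range overlaps.

```python
def frames_needed(frame_types: str, target: int) -> range:
    """Frames required to decode `target`, given one 'I'/'P' letter per frame.

    Assumes I/P-only GOPs where decode order equals display order.
    """
    if not 0 <= target < len(frame_types):
        raise IndexError("target out of range")
    start = frame_types.rfind("I", 0, target + 1)  # last I-frame at or before target
    if start == -1:
        raise ValueError("no I-frame precedes the target frame")
    return range(start, target + 1)


def chunks_touched(needed: range, frames_per_chunk: int) -> range:
    """Indices of fixed-size chunks a chunk-based transport would have to download."""
    first = needed.start // frames_per_chunk
    last = (needed.stop - 1) // frames_per_chunk
    return range(first, last + 1)


if __name__ == "__main__":
    types = ("I" + "P" * 11) * 3  # three 12-frame GOPs
    need = frames_needed(types, 21)
    print(len(need), "frames needed;", len(chunks_touched(need, 10)) * 10, "frames fetched")
```

With 12-frame GOPs and 10-frame chunks, decoding frame 21 needs frames 12 to 21 (ten frames), but because that GOP spans chunks 1 and 2, a chunked transport downloads twenty frames; a frame-exact transport fetches only the ten.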

Producers, especially in sports and news, frequently need to scrub through large amounts of video to find the elements they need for their project. Downloading only the frames needed for decoding reduces streaming latency and improves the user experience. A further improvement is to support asynchronous sending and receiving of packets: in an asynchronous frame-request scenario, the client can send multiple requests at once while receiving the responses in parallel, which improves response times.
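Pipelined frame requests over a single TCP connection can be sketched with Python's `asyncio` streams. The authors' protocol is proprietary and not described here, so the wire format below is entirely hypothetical: each request is a 4-byte frame index, and each response is a header carrying the frame index and payload length, followed by the payload. A local mock server stands in for the streaming server.

```python
import asyncio
import struct

REQ = struct.Struct(">I")        # hypothetical request: frame index
RESP_HDR = struct.Struct(">II")  # hypothetical response header: frame index, payload length


async def handle_client(reader, writer):
    """Mock streaming server: answers each frame request with a fake payload."""
    try:
        while True:
            (index,) = REQ.unpack(await reader.readexactly(REQ.size))
            payload = f"frame-{index}".encode()
            writer.write(RESP_HDR.pack(index, len(payload)) + payload)
            await writer.drain()
    except (asyncio.IncompleteReadError, ConnectionResetError):
        pass  # client closed the connection
    finally:
        writer.close()


async def fetch_frames(host, port, indices):
    """Send all requests without waiting for replies, while a task reads responses."""
    reader, writer = await asyncio.open_connection(host, port)

    async def receive_all():
        frames = {}
        for _ in indices:
            index, length = RESP_HDR.unpack(await reader.readexactly(RESP_HDR.size))
            frames[index] = await reader.readexactly(length)  # keyed by index, so order is irrelevant
        return frames

    receiver = asyncio.create_task(receive_all())
    for index in indices:
        writer.write(REQ.pack(index))  # pipelined: no round trip per request
    await writer.drain()
    frames = await receiver
    writer.close()
    await writer.wait_closed()
    return frames


async def demo():
    server = await asyncio.start_server(handle_client, "127.0.0.1", 0)
    port = server.sockets[0].getsockname()[1]
    async with server:
        return await fetch_frames("127.0.0.1", port, [10, 11, 12, 40])


if __name__ == "__main__":
    print(asyncio.run(demo()))
```

Because each response carries its frame index, the client does not depend on replies arriving in request order, which leaves a real server free to answer whichever frame it can serve first.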

Latency depends on the quality of the network and especially on the distance between streaming server and client. Therefore, the cloud region serving the client needs to be as close to it as possible. The large hyperscalers AWS and Microsoft Azure, with data centers distributed across the globe, make it possible to meet this requirement. They also allow the streaming server and storage to be located in the same availability zone, which is important because the streaming server needs sufficient random-access performance from cloud storage. Combined with the TCP-based solution’s ability to transfer frames with byte-level precision, this helps resolve the conflict between using MXF’s ability to edit growing files and the restrictions that object storage places on that use.
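Byte-exact transfer from object storage typically relies on HTTP range reads. The sketch below assumes a hypothetical per-frame index of `(byte_offset, byte_size)` pairs, for example derived from the container's index data, and turns a set of requested frames into merged byte ranges, emitting one `Range` header value per range (the end offset is inclusive). One header per request is used because not every object store accepts multiple ranges in a single request. `coalesce_ranges` and `range_headers` are illustrative names.

```python
def coalesce_ranges(frame_index, frames, max_gap=0):
    """Turn requested frames into merged, inclusive (first_byte, last_byte) ranges.

    frame_index[i] is (byte_offset, byte_size) of frame i in the proxy file.
    Ranges separated by at most max_gap unneeded bytes are merged, trading a
    few extra bytes for fewer requests.
    """
    spans = sorted(
        (frame_index[f][0], frame_index[f][0] + frame_index[f][1] - 1)
        for f in set(frames)
    )
    merged = []
    for first, last in spans:
        if merged and first - merged[-1][1] - 1 <= max_gap:
            merged[-1] = (merged[-1][0], max(merged[-1][1], last))
        else:
            merged.append((first, last))
    return merged


def range_headers(ranges):
    """One HTTP Range header value per merged range (end offset is inclusive)."""
    return [f"bytes={first}-{last}" for first, last in ranges]


if __name__ == "__main__":
    index = [(0, 100), (100, 50), (150, 60), (210, 40), (400, 30)]
    print(range_headers(coalesce_ranges(index, [0, 1, 2, 4])))
```

The `max_gap` parameter captures the design trade-off: on a high-latency link, fetching a few unneeded bytes between two frames is often cheaper than paying for an extra request round trip.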

No single change will improve and accelerate cloud-based editing workflows enough to make cloud-based editing the norm in sports. What is needed is work in several areas, including server-storage connectivity, the use of TCP, reducing the number of frames transferred, improved streaming protocols, better handling of audio files, smart local caching, and more.
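Local caching is one of the simpler items on that list to illustrate. The sketch below uses a least-recently-used (LRU) policy, a deliberately basic choice; a “smart” cache might also prefetch ahead of the playhead. `FrameCache` and its `fetch` callback are hypothetical names, with `fetch` standing in for a network request to the streaming server.

```python
from collections import OrderedDict


class FrameCache:
    """Keeps recently used frames locally so scrubbing back over them needs no new request."""

    def __init__(self, capacity, fetch):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self.fetch = fetch          # callable: frame index -> frame data
        self._frames = OrderedDict()
        self.misses = 0             # number of frames that had to be fetched

    def get(self, index):
        if index in self._frames:
            self._frames.move_to_end(index)  # mark as most recently used
            return self._frames[index]
        self.misses += 1
        frame = self.fetch(index)
        self._frames[index] = frame
        if len(self._frames) > self.capacity:
            self._frames.popitem(last=False)  # evict the least recently used frame
        return frame
```

Scrubbing back and forth over a short passage then costs network requests only for frames that have dropped out of the cache.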