SVG Tech Insight: Optimizing Timing of Signals in IP Remote Production

This fall SVG will be presenting a series of White Papers covering the latest advancements and trends in sports-production technology. The full series of SVG’s Tech Insight White Papers can be found in the SVG Fall SportsTech Journal.

Transforming sports coverage

Remote production is transforming sports coverage by revolutionizing the economics and logistics of live event production. Where substantial outside broadcast production capabilities and staff were once needed onsite to produce the main feed, most of the production can now be done from central facilities. This means less equipment and fewer staff are needed onsite, and the resources in the central location can be better utilized.

Remote production has been making high-profile sports events, such as the Super Bowl, the FIFA World Cup, and the Summer and Winter Games, more cost-effective to produce at a time when the cost of acquiring broadcasting rights is soaring.

Often forgotten, though, is that remote production also makes the coverage of events with smaller audiences (e.g. lower leagues or less common sports) commercially viable, thereby creating new revenue streams for broadcasters. For example, HDR, a service provider to the broadcast industry, used a remote production solution based on Nevion equipment and software to enable professional coverage of Danish horse racing. This is not a sport that attracts a large audience, but it can be broadcast profitably with the right production cost structure.

The COVID-19 pandemic in the first half of 2020 significantly accelerated the move to remote production, because of the need to keep the people involved as safe as possible.

Time is of the essence

A significant factor in the growth of remote production has been the availability of ever more cost-effective bandwidth that telecom service providers offer on their high-performance IP-based networks, which can be leveraged to better connect venues and central production.

Only a few years ago, the cost of long-haul transport (over terrestrial or satellite links) was so high that only a few high-quality signals could be transported from (and indeed to) the venues. As a result, coverage had to be largely produced onsite, with mainly the final program output transported to the central facility.

Now, however, it is often viable to transport all the flows needed to produce the coverage centrally, so remote production is replacing onsite production in many instances.

One key distinction between onsite production and remote production is timing, especially latency and signal synchronization. These aspects are covered in detail in the rest of this whitepaper.

Keeping a lid on latency

Live coverage requires signals to be transported with as little latency as possible across the whole production workflow.

When the coverage is largely handled on location (e.g. with an OB truck), production is self-contained and, as a result, latency is not an issue within the production environment. In this scenario, the transport back to the central location (contribution) needs to be prompt, but a reasonable delay can be tolerated: for example, the latency of satellite contribution with temporal compression can be several seconds.

With remote production, the connectivity between the venue and the remote central gallery forms a crucial part of the production, and latency needs to be kept as low as possible, close to that experienced in a local production environment.

Apart from the speed of light in fiber, every aspect of the signal transport needs to be optimized to minimize latency.
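To give a sense of the floor that fiber propagation sets, the following minimal sketch estimates one-way delay over a given fiber length. The refractive index of 1.468 is an assumed typical value for single-mode fiber and the function names are illustrative. A real route is normally longer than the straight-line distance, so the result is best read as a lower bound.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458   # speed of light in vacuum
FIBER_REFRACTIVE_INDEX = 1.468      # assumption: typical single-mode fiber


def fiber_delay_ms(distance_km, refractive_index=FIBER_REFRACTIVE_INDEX):
    """One-way propagation delay in milliseconds over a fiber of this length."""
    speed_in_fiber_km_s = SPEED_OF_LIGHT_KM_S / refractive_index
    return distance_km / speed_in_fiber_km_s * 1000.0


def delay_in_frames(delay_ms, frames_per_second):
    """Express a delay as a number of video frames."""
    return delay_ms * frames_per_second / 1000.0


if __name__ == "__main__":
    one_way = fiber_delay_ms(9000)
    print(f"one-way delay: {one_way:.1f} ms")
    print(f"at 50 fps: {delay_in_frames(one_way, 50):.1f} frames")
```

Under these assumptions, a 9,000 km path (the distance cited later in this paper) costs roughly 44 ms one way, or a little over two frames at 50 frames per second, before any equipment adds its own delay.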

Transport optimization

Live remote-production workflows inherently involve the transport of signals over long distances, passing through edge equipment (e.g. for encoding and protection), routers, and processing equipment. The choice of technology and the configuration of this equipment can have a significant impact on the overall latency incurred.

Low latency compression

Despite the availability of more cost-effective transmission capacity, the demands of modern remote production in terms of the number of signals and the quality of these (4K/UHD, HDR, HFR, WCG) mean that, in many situations, some form of video compression is needed to reduce bandwidth requirements.

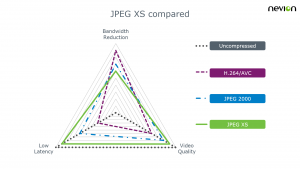

Video compression is always a compromise between image quality, compression ratio, and latency. As noted above, low latency is crucial for remote production, so traditional codecs such as H.265, H.264, and, more recently, JPEG 2000 are not ideal, because their total end-to-end latency spans multiple frames.

New codecs have been developed that offer substantially reduced latency. For example, JPEG 2000 ULL (ultra-low latency) achieves a total end-to-end latency of around one frame, while keeping a compression ratio close to 10-to-1. TICO achieves an even lower (sub-frame) latency but offers a ratio of only 4-to-1, which is useful for squeezing 4K onto a 3G link but less effective for bandwidth savings.
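The ratios above can be put into rough numbers with a short calculation. This is a sketch only: the UHD raster, the 60 frames per second rate, and 4:2:2 10-bit sampling (20 bits per pixel on average) are assumptions chosen for illustration, blanking and ancillary data are ignored, and the function names are hypothetical.

```python
def active_video_gbps(width, height, frames_per_second, bits_per_pixel):
    """Uncompressed active-picture bitrate in Gbps (blanking ignored)."""
    return width * height * frames_per_second * bits_per_pixel / 1e9


def compressed_gbps(uncompressed_gbps, ratio):
    """Bitrate after compression at the given ratio (e.g. 4 for 4-to-1)."""
    return uncompressed_gbps / ratio


if __name__ == "__main__":
    # Assumption: UHD 3840x2160, 60 fps, 4:2:2 10-bit = 20 bits per pixel.
    uhd = active_video_gbps(3840, 2160, 60, 20)
    print(f"uncompressed: {uhd:.2f} Gbps")
    for ratio in (4, 10):
        print(f"{ratio}-to-1: {compressed_gbps(uhd, ratio):.2f} Gbps")
```

Under these assumptions, the uncompressed active video is just under 10 Gbps. A 4-to-1 codec brings that to about 2.5 Gbps, within a nominal 2.97 Gbps 3G link, while a 10-to-1 codec brings it to about 1 Gbps.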

Most recently, JPEG XS has emerged as an ideal candidate for remote production. JPEG XS achieves pristine multi-generational compression with ratios of up to 10-to-1 and a latency of a tiny fraction of a frame.

A commercial implementation of JPEG XS has been available from Nevion since August 2019 and has successfully been used for live production; for example, by Riot Games, which remote-produced from Los Angeles the esport final of the League of Legends taking place in Paris — 9,000 km away!

Signal synchronization

An inherent part of live production is the requirement for signals from all sources to be synchronized. When doing onsite production, a local timing reference is distributed and used by all equipment.

Signal synchronization in an IP remote production environment presents its own challenges because it involves multiple geographically diverse locations (e.g. the venue and the central ‘at home’ production location).

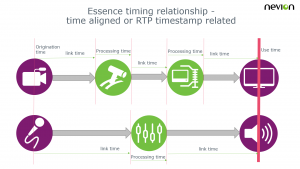

The locations clearly need to be frequency-aligned (typically using a GNSS reference at each location) and phase-aligned, so that the transit delays can be compensated for via appropriate buffering in the IP media edge (IPME) where the central production is taking place.
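The buffering idea can be illustrated with a small sketch: given a measured one-way transit delay for each incoming flow, every flow is delayed just enough to line up with the slowest one. The flow names, the delay figures, and the optional safety margin are assumptions for illustration, not a description of any particular product.

```python
def alignment_buffers_ms(transit_delays_ms, margin_ms=0.0):
    """Return the extra buffering (ms) each flow needs so all flows align.

    transit_delays_ms maps a flow name to its measured one-way transit delay.
    Every flow is padded up to the slowest flow's delay plus a safety margin.
    """
    target_ms = max(transit_delays_ms.values()) + margin_ms
    return {name: target_ms - delay for name, delay in transit_delays_ms.items()}


if __name__ == "__main__":
    # Hypothetical measurements for three flows arriving from a venue.
    delays = {"cam1": 22.0, "cam2": 25.5, "audio": 21.0}
    for name, buffer_ms in alignment_buffers_ms(delays, margin_ms=1.0).items():
        print(f"{name}: add {buffer_ms:.1f} ms of buffering")
```

The slowest flow receives only the margin, while faster flows are held back longer, so the aligned output is only as late as the worst-case path.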

Unlike native SDI and AES3, which only implicitly convey relative time, SMPTE ST 2110 has the inherent capability of defining absolute time through the RTP timestamp being referenced to (GNSS derived) PTP. This means that each essence has a timestamp relating to the point of capture, which allows alignment to take place at any point in the workflow with total certainty — a real benefit in production.
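As a rough illustration of how an RTP timestamp can be tied to PTP time, the sketch below converts a PTP time (seconds and nanoseconds since the PTP epoch) into a 32-bit RTP timestamp. It assumes the 90 kHz media clock commonly used for video; audio flows typically use the sampling rate instead. The function names are illustrative, and the standards themselves remain the authority on the exact rules.

```python
VIDEO_RTP_CLOCK_HZ = 90_000   # assumption: 90 kHz media clock for video
RTP_WRAP = 2 ** 32            # RTP timestamps are 32-bit and wrap around
NS_PER_SECOND = 1_000_000_000


def rtp_timestamp(ptp_seconds, ptp_nanoseconds, clock_hz=VIDEO_RTP_CLOCK_HZ):
    """Media-clock ticks since the PTP epoch, truncated to 32 bits."""
    total_ns = ptp_seconds * NS_PER_SECOND + ptp_nanoseconds
    ticks = total_ns * clock_hz // NS_PER_SECOND   # integer maths, no rounding drift
    return ticks % RTP_WRAP


def ticks_between(ts_a, ts_b):
    """Signed difference ts_b - ts_a in ticks, tolerating 32-bit wrap-around."""
    diff = (ts_b - ts_a) % RTP_WRAP
    return diff - RTP_WRAP if diff >= RTP_WRAP // 2 else diff


if __name__ == "__main__":
    first = rtp_timestamp(100, 0)
    second = rtp_timestamp(100, 20_000_000)   # captured 20 ms later
    print(ticks_between(first, second))        # 1800 ticks at 90 kHz
```

Because both timestamps derive from the same absolute time base, two essences captured at different locations can be compared directly, provided every device in the chain preserves the original values.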

However, leveraging that advantage is currently hampered by the fact that, while some appliances like Nevion’s software-defined media node (Virtuoso) preserve the origination timing in the RTP timestamps, many pieces of equipment don’t, meaning that this absolute reference is lost.

A recent revision of SMPTE ST 2110-10 is now encouraging the practice of maintaining origination timing through the production chain and hopefully more manufacturers will adopt this approach.

Time domain correction

The broadcasting industry’s approach to live production has traditionally been that the production team needs to be handling signals in precisely the same time domain, with no perceptible delay between capture by the cameras and microphones and the production gallery. The previous sections of this whitepaper have also followed this assumption.

However, recent tools like augmented reality (AR) have shown that live production chains can in fact deal with noticeable delays and with different parts of a production gallery working in offset time domains.

Building on this observation, some solutions are emerging that embrace delay as part of a trade-off to minimize the cost of transit bandwidth. The approach involves running the remote gallery at home on proxy images (i.e. not the full-resolution flows) in a delayed time domain, while the full-resolution processing remains onsite. The time-offset vision and audio controls from the gallery are then applied retrospectively at the origination site to buffered versions of the full-resolution signals, with timing compensation to account for the transport and processing delays. Because the full-resolution flows no longer need to be transported from the site to the central facilities, the bandwidth (and cost) savings can be substantial.
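The mechanism described above can be sketched in a few lines. In this toy model, the onsite system buffers full-resolution frames by capture timestamp, and each cut command from the gallery carries the capture timestamp of the proxy frame the operator was looking at. The class and field names are hypothetical, and the fixed buffer depth stands in for the real timing compensation.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class CutCommand:
    capture_ts: int   # capture timestamp of the proxy frame the cut was made on
    source: str       # source to switch to, e.g. "cam2"


class OnsiteSwitcher:
    """Applies delayed gallery cuts to buffered full-resolution frames."""

    def __init__(self, buffer_frames, initial_source):
        self.buffer_frames = buffer_frames   # depth must cover the round-trip delay
        self.buffer = deque()
        self.pending = []
        self.live_source = initial_source

    def receive_command(self, command):
        self.pending.append(command)

    def push_frames(self, capture_ts, frames_by_source):
        """Buffer new frames; emit the oldest program frame once the buffer is full."""
        self.buffer.append((capture_ts, frames_by_source))
        if len(self.buffer) <= self.buffer_frames:
            return None
        ts, frames = self.buffer.popleft()
        # Apply every cut whose timestamp has been reached, in time order.
        for command in sorted(self.pending, key=lambda c: c.capture_ts):
            if command.capture_ts <= ts:
                self.live_source = command.source
        self.pending = [c for c in self.pending if c.capture_ts > ts]
        return ts, frames[self.live_source]


if __name__ == "__main__":
    switcher = OnsiteSwitcher(buffer_frames=3, initial_source="cam1")
    for ts in range(6):
        if ts == 4:   # the cut made on proxy frame 2 arrives two frames late
            switcher.receive_command(CutCommand(capture_ts=2, source="cam2"))
        out = switcher.push_frames(ts, {"cam1": f"cam1@{ts}", "cam2": f"cam2@{ts}"})
        if out:
            print(out)
```

Even though the command arrives after frame 4 has been captured, the cut lands exactly on frame 2 of the full-resolution program, because that frame is still in the buffer. A cut that arrives after its frame has already left the buffer would take effect late, which is why the buffer depth has to cover the full transport and processing delay.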

This time domain correction approach is likely to become more widespread over time, especially with cloud-based live production, which introduces latency into the production flow.

Towards distributed production

IP remote production is just one part of a more comprehensive move towards distributed production, where all the production resources (studios, control rooms, datacenters, OB vans, equipment, cloud processing, and even people) are connected via IP networks and can be shared across locations to create content. Distributed production not only brings cost savings through better usage of the resources, but also enables a total rethink of workflows, unrestricted by geography. In that sense, distributed production is the big prize of the move to IP.

As with IP remote production, though, getting timing right is fundamental to the success of distributed production.