ESPN, DMED Break New AR Ground With Real-Time Data Visualization on Monday Night Football

New real-time AR telestrator tool takes data-driven storytelling to a new level

From the Emmy Award-winning K-Zone 3D to the 3D spray charts that revolutionized how fans watch the Home Run Derby, ESPN and Disney Media & Entertainment Distribution (DMED) have pushed the boundaries of what’s possible in visualizing data in a live baseball broadcast. Last week, for the first time, ESPN and DMED brought this data-fueled augmented-reality technology to the gridiron on Monday Night Football.

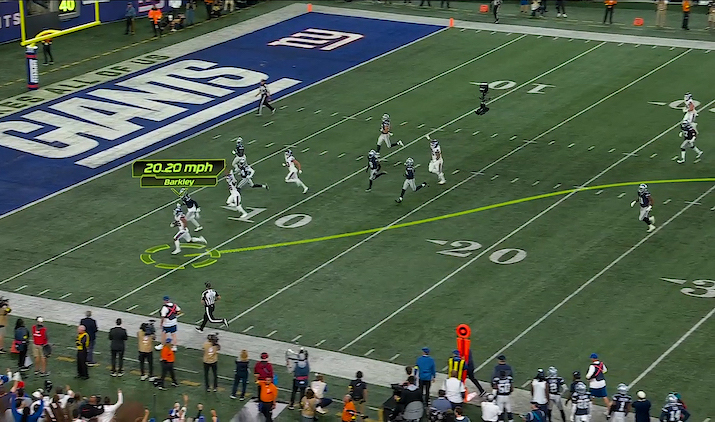

After roughly two years of development, last Monday’s Cowboys-Giants MNF game featured the launch of what could be described as a “real-time, data-driven AR telestrator.” It appeared for the first time on-air to showcase the precise speed of Giants running back Saquon Barkley on a breakaway 36-yard touchdown run. Just after Barkley scored, viewers saw a replay of the run with an AR pointer graphic showing his running path and his exact speed at any given second as he scampered away from Cowboys defenders toward the end zone.

DMED’s real-time AR data-visualization tool debuted during the Monday Night Football broadcast Sept. 26.

“We view this as a really important milestone from a technical standpoint because it is live data-driven spatial AR,” says Kaki Navarre, VP, software engineering, DMED. “That’s a much bigger deal than any one graphic. It’s technologically meaningful, and we are just scratching the surface of how this could be used to enrich the viewing experience for fans. From a technology standpoint, we have the capability to do things that have never been done before, and we couldn’t be more excited.”

How It Works: A Symphony of Next-Gen Tech in Action

The system uses proprietary DMED software and tools to sync live player and game data from the NFL’s Next Gen Stats (NGS) platform with SMT camera telemetry and live video to provide a real-time, player-specific statistical visualization. For now, these AR replays are available on both the Skycam and All-22 angles, but other cameras could be added in the future.
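DMED has not published how its software performs this sync, so the following is only a minimal sketch of one common approach: if tracking samples and video frames share a clock, a player's position at any frame time can be estimated by linear interpolation between the two nearest samples. The `TrackingSample` type, the `position_at` function, and the field units are all hypothetical and do not reflect the actual NGS or DMED data formats.

```python
from bisect import bisect_right
from dataclasses import dataclass


@dataclass(frozen=True)
class TrackingSample:
    """One hypothetical tracking reading for a single player."""
    t: float  # seconds on a clock assumed to be shared with the video
    x: float  # field position in yards (assumed unit)
    y: float  # field position in yards (assumed unit)


def position_at(samples, frame_time):
    """Estimate (x, y) at frame_time by linear interpolation.

    `samples` must be sorted by time. Times outside the sampled
    range are clamped to the first or last known position.
    """
    if not samples:
        raise ValueError("no tracking samples")
    times = [s.t for s in samples]
    i = bisect_right(times, frame_time)
    if i == 0:
        return (samples[0].x, samples[0].y)
    if i == len(samples):
        return (samples[-1].x, samples[-1].y)
    before, after = samples[i - 1], samples[i]
    # Fraction of the way from the earlier sample to the later one.
    w = (frame_time - before.t) / (after.t - before.t)
    return (
        before.x + w * (after.x - before.x),
        before.y + w * (after.y - before.y),
    )
```

Calling `position_at` once per video frame would give a renderer a position for every frame even when tracking data arrives at a lower rate than the video. Projecting that position into the camera image would additionally require the camera telemetry, which this sketch leaves out.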

Deploying Epic Games’ Unreal Engine and Pixotope’s AR-creation technology, the DMED team can create real-time graphics that visually showcase live data. Most notably, these segments are available to the MNF production team in two seconds or less, enabling them to be used in the broadcast as first replays.

Early in development, the technology functioned as a CG application before DMED transitioned it to a full AR ecosystem. At the end of the 2021-22 NFL season, the DMED team completed onsite tests of the technology for director Jimmy Platt and producer Phil Dean, who were instantly enthusiastic about what it could mean for this season’s MNF broadcasts.

“They took a look at it and got very excited,” says Principal Real-Time Artist Brian Rooney, who helped to lead the project. “This year, we have been invited to be on the truck full-time to roll this out and scale it up as the season goes on. This Saquon play was our first execution, and we plan to see a lot more moving forward.”

Rooney and Principal Software Engineer Jeffrey Bradshaw will be the operators in the truck tonight, using a touchscreen interface on the MNF production. Prior to each play, the operators click on the players of interest — quarterback, running back, receiver — and the system creates automated AR highlights based on the player’s position and Next Gen Stats data. Stats cover anything from the speed of a player on a TD run to the separation between a receiver and defensive back on a key catch to how quickly defenders rush the passer on a sack.
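As a rough illustration of the kinds of stats mentioned above, speed and separation can both be derived from positional tracking data. This is a sketch under stated assumptions, not the NGS or DMED calculation: the function names are hypothetical, and positions are assumed to be (x, y) pairs measured in yards.

```python
import math

YARDS_PER_MILE = 1760
SECONDS_PER_HOUR = 3600


def speed_mph(p0, p1, dt_seconds):
    """Average speed in miles per hour between two (x, y) positions in yards."""
    if dt_seconds <= 0:
        raise ValueError("dt_seconds must be positive")
    yards_travelled = math.dist(p0, p1)
    yards_per_second = yards_travelled / dt_seconds
    return yards_per_second * SECONDS_PER_HOUR / YARDS_PER_MILE


def separation_yards(receiver, defender):
    """Straight-line distance in yards between two players at one instant."""
    return math.dist(receiver, defender)
```

For example, a player who covers 1 yard in a tenth of a second is moving at 10 yards per second, which `speed_mph` converts to roughly 20.5 mph.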

“For every single highlight that we create,” says Rooney, “the goal is to have the viewer understand something that they couldn’t see otherwise in 3-5 seconds. No lower-third graphic or explanation necessary; they understand what’s happening right away.”

Storytelling: Changing Data Visualization for Both Live and On-Demand Experience

ESPN and DMED believe this technology unlocks previously impossible storytelling opportunities through real-time statistical graphics visually showcasing live data on the field.

“There are two main ways this helps enhance storytelling,” says Navarre. “One is that it allows folks who may not as easily grasp advanced statistics to understand the details [because they] can see [the data] in real spatial dimensions. The other is obviously advanced stats for hardcore fans, who already understand those concepts; we can now take them a level deeper.”

ESPN sees this as a new opportunity to enhance how fans see statistical data not only in the live linear game broadcast but also across its sprawling portfolio of studio shows and digital platforms.

“Everything is being architected for both live and on-demand from the ground up,” says Christiaan Cokas, director, software engineering, DMED. “The team has done a great job getting the data embedded into the video so it can be easily scrubbed back and forth. Our team is working on a platform to let you go into that game, mark up a video, and output that for highlights.

“In addition to being part of a live game [broadcast],” he continues, “our system can let editors and producers of other shows go into the Monday Night Football game and grab a play, mark that up, and use that as well in their packages.”

Cokas adds that this technology also opens up a whole new universe of possibilities for more personalized and interactive experiences for fans.

“When you have an [operator] with a touchscreen in their hands and they can just click on a player and get a unique experience,” says Cokas, “you can’t help but think about what that could mean for mass distribution and the individual fan experience. There are big hurdles, and we understand that. But moving to a world where we can have an interactive device in someone’s hands is obviously a north star that we want to work towards.”

What To Expect This Season: Continued Evolution From Week to Week

Although the frequency with which this new real-time data-driven AR telestrator will be used obviously depends on the production team and the game itself, DMED expects the tool to be used more and more as the season progresses.

“The priority for us is always about what [a new technology] does for the storytelling,” says Navarre. “We’re entering the phase where it is getting used by production in real-game scenarios to enhance the audience experience. We’re going to learn a lot every week. That’s why we’ve put [the operators] in a hands-on position [in the truck]: so that we can learn from those initial executions and continue to evolve each week.”

Rooney believes that Platt, Dean, and the entire production team understand the almost infinite data-storytelling capabilities afforded by this new technology and notes that DMED’s platform will be ready for whatever is asked of it in the future.

“As for scaling up the application itself,” he says, “we’re prepared for whatever comes our way. There is a plethora of data and information from Next Gen Stats, and we’ve already built a lot of highlight [templates] that we are going to scale into production as everyone gets more comfortable with it. So definitely look for more.”