New Angles on 2D and 3D Images

Shooting stereoscopic 3D has involved many parameters: magnification, interaxial distance, toe-in angle (which can be zero), image-sensor-to-lens-axis shift, etc. Must we now add shutter angle (or exposure time) to all of those? The answer seems to be yes.

Unlike other posts here, this one will not have many pictures. As the saying goes, “You had to be there.” There, in this case, was the SMPTE Technology Summit on Cinema (TSC) at the National Association of Broadcasters (NAB) convention in Las Vegas last month.

The reason you had to be there was the extraordinary projection facilities that had been assembled. There was stereoscopic, higher-than-HDTV-resolution, high-frame-rate projection. There was even laser-illuminated projection, but that will be the subject of a different post.

The subject of this post is primarily the very last session of the SMPTE TSC, which was called “High Frame Rate Stereoscopic 3D,” moderated by SMPTE engineering vice president (and senior vice president of technology of Warner Bros. Technical Operations) Wendy Aylsworth. It featured Marty Banks of the Visual Space Perception Laboratory at the University of California – Berkeley, Phil Oatley of Park Road Post, Nick Mitchell of Technicolor Digital Cinema, and Siegfried Foessel of Fraunhofer IIS.

You might not be familiar with Park Road Post. It’s located in New Zealand — a very particular part of New Zealand, within walking distance of Weta Digital, Weta Workshop, and Stone Street Studios. If that suggests a connection to the Lord of the Rings trilogy and other movies, it’s an accurate impression. So, when Peter Jackson chose to use a high frame rate for The Hobbit, Park Road Post arranged to demonstrate multiple frame rates. Because higher frame rates mean less exposure time per frame, they also arranged to test different shutter angles.

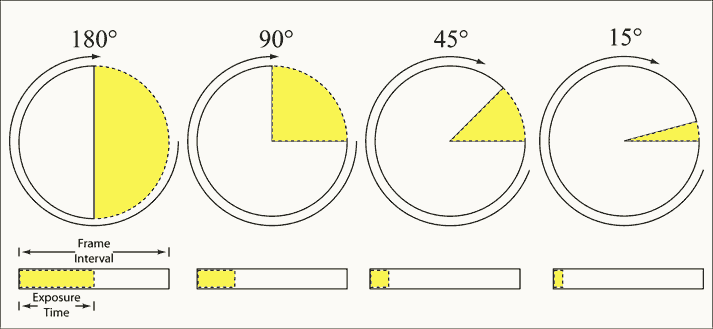

Video engineers are accustomed to discussing exposure times in terms of, well, times: 17 milliseconds, 10 milliseconds, etc. Percentages of the full-frame time (e.g., 50% shutter) and equivalent frame rates (e.g., 90 frames per second, or 90 fps) are also used. Cinematographers have usually expressed exposure times in terms of shutter angles.

Motion-picture film cameras (and some electronic cameras) have rotating shutters. The shutters need to be closed while the film moves and a new frame is placed into position. If the rotating shutter disk is a semicircle, that's said to be a 180-degree shutter, exposing the film for half of each frame period. By adjusting a movable portion of the disk, smaller openings (120-degree, 90-degree, etc.) may easily be achieved, as shown above <http://en.wikipedia.org/wiki/File:ShutterAngle.png>.
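
Because the disk makes one revolution per frame, converting between angle and exposure time is simple arithmetic: exposure equals (angle / 360) × (1 / frame rate). A minimal Python sketch, assuming an idealized shutter whose edges open and close instantly; the function names are illustrative and not taken from any camera's software:

```python
def exposure_time(fps: float, shutter_angle_deg: float) -> float:
    """Exposure in seconds for an idealized rotary shutter.

    The disk turns once per frame, so light reaches the film or sensor for
    shutter_angle / 360 of each frame period (1 / fps).
    """
    if not 0 < shutter_angle_deg <= 360:
        raise ValueError("shutter angle must be in (0, 360] degrees")
    return (shutter_angle_deg / 360.0) / fps


def shutter_angle(fps: float, exposure_s: float) -> float:
    """Inverse conversion: the angle that yields a given exposure time."""
    angle = 360.0 * exposure_s * fps
    # Small tolerance so a full-frame exposure is not rejected over rounding.
    if not 0 < angle <= 360.0 + 1e-9:
        raise ValueError("exposure must be positive and no longer than one frame")
    return angle


if __name__ == "__main__":
    # A semicircular (180-degree) disk at 24 fps exposes for 1/48 second.
    print(f"{exposure_time(24, 180):.5f} s")            # 0.02083 s
    # 60 fps with a 1/120-second exposure corresponds to a 180-degree shutter.
    print(f"{shutter_angle(60, 1 / 120):.1f} degrees")  # 180.0 degrees
    # The same 180-degree angle at 48 fps halves the exposure to 1/96 second.
    print(f"{exposure_time(48, 180):.5f} s")            # 0.01042 s
```

The same angle therefore describes the same fraction of each frame period at any frame rate, while the exposure time itself changes with the frame rate.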

Certain things have long been known about shutters: The longer they are open, the more light gets to the film. For any particular film stock, exposure can be adjusted with shutter, iris, and optical filtering (and various forms of processing can also have an effect, and electronic cameras can have their gains adjusted). Shorter shutter times provide sharper individual frames when there is motion, but they also tend to portray the motion in a jerkier fashion. And there are specific shutter times that can be used to minimize the flicker of certain types of lighting or video displays.
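
As one illustration of those specific shutter times: lamps driven by AC mains often flicker at twice the line frequency (100 Hz on 50-Hz power, 120 Hz on 60-Hz power). If each exposure spans a whole number of flicker cycles, every frame collects the same amount of light. The sketch below lists such angles; the function name is hypothetical, and it assumes a simple periodic flicker at exactly twice the mains frequency, which not every fixture follows.

```python
def flicker_safe_angles(fps: float, mains_hz: float) -> list[float]:
    """Shutter angles (up to 360) whose exposure spans whole flicker cycles.

    Assumes lamps that flicker at exactly twice the mains frequency. An
    exposure lasting an integer number of flicker periods collects the same
    light in every frame, whatever the phase of the flicker.
    """
    flicker_period = 1.0 / (2.0 * mains_hz)
    angles = []
    cycles = 1
    while True:
        angle = 360.0 * fps * cycles * flicker_period
        if angle > 360.0 + 1e-9:  # tolerance for floating-point rounding
            break
        angles.append(round(angle, 3))
        cycles += 1
    return angles


if __name__ == "__main__":
    print(flicker_safe_angles(24, 50))  # [86.4, 172.8, 259.2, 345.6]
    print(flicker_safe_angles(24, 60))  # [72.0, 144.0, 216.0, 288.0, 360.0]
```

At 24 fps on 50-Hz power, this yields the 172.8-degree setting that some cinematographers use in place of 180 degrees.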

That was what was commonly known about shutters before the NAB SMPTE TSC. And then came that last session.

Oatley, Park Road Post’s head of technology, showed some tests that had been shot stereoscopically at various frame rates and shutter angles. The scene was a sword fight, with flowing water and bright lights in the image. Some audience members noticed what appeared to be a motion-distortion problem. The swords seemed to bend. Oatley explained that the swords did bend. They were toy swords.

That left the real differences. Sequences were shown that were shot at different frame rates and at different shutter angles. As might be expected, higher frame rates seemed to make the images somewhat more "video-like" (there are many characteristics of what might be called the look of traditional film-based motion pictures, and one of those is probably the 24-fps frame rate).

At each frame rate, however, the change from a 270-degree shutter angle to 360 degrees made the pictures look much more video-like. The effect appeared greater than that of increasing the frame rate, and it occurred at all of the frame rates.

Foessel, head of the Fraunhofer Institute's department of moving picture technologies, also showed the effects of different frame rates and shutter angles, but they were created differently. A single sequence at a boxing gym was shot with a pair of time-synchronized ARRI Alexa cameras in a Stereotec mirror rig at 120 fps with a 356-degree shutter.

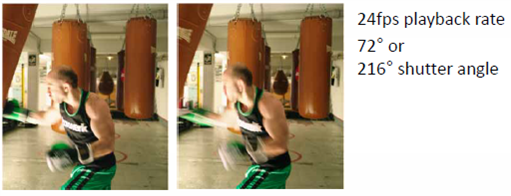

When the first of every group of five frames was isolated, the resulting sequence was the equivalent of material shot at 24 fps with a 71.2-degree shutter (the presentation called it 72-degree). If the first three of every five frames were combined, the result was roughly the equivalent of material shot at 24 fps with a 213.6-degree shutter (the presentation called it 216-degree). It’s roughly equivalent because there are two tiny moments of blackness that wouldn’t have been there in actual shooting with the larger shutter angle.
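
The arithmetic behind those equivalents is straightforward: each 120-fps frame lasts one fifth of a 24-fps frame period, so its 356-degree opening is worth 356 / 5 = 71.2 degrees at 24 fps, and each 4-degree closed interval is worth 0.8 degree. A sketch that generalizes it, assuming the kept frames are simply summed with no interpolation (the function name is hypothetical):

```python
def combined_equivalent(capture_fps: int, capture_angle: float,
                        target_fps: int, frames_kept: int) -> tuple[float, float]:
    """Equivalent shutter angle when the first `frames_kept` frames of each
    group of capture_fps / target_fps frames are summed into one output frame.

    Returns (open_angle, gap_angle) in degrees of the target frame period;
    gap_angle is the total closed-shutter time between the summed frames.
    """
    if capture_fps % target_fps:
        raise ValueError("target rate must divide the capture rate evenly")
    group = capture_fps // target_fps
    if not 1 <= frames_kept <= group:
        raise ValueError("frames_kept must be between 1 and the group size")
    open_per_frame = capture_angle / group              # e.g. 356 / 5 = 71.2
    closed_per_frame = (360.0 - capture_angle) / group  # e.g. 4 / 5 = 0.8
    return frames_kept * open_per_frame, (frames_kept - 1) * closed_per_frame


if __name__ == "__main__":
    for kept in (1, 3):
        open_deg, gap_deg = combined_equivalent(120, 356.0, 24, kept)
        print(f"{kept} of 5 frames: {open_deg:.1f} degrees open, "
              f"{gap_deg:.1f} degrees of gaps")
```

For three frames, the gap term is the two tiny moments of blackness: 1.6 degrees in total, or about 0.19 millisecond at 24 fps.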

As shown above, the expected effects of sharpness and motion judder were seen in the differently shuttered examples. But there was another effect. The stereoscopic depth appeared to be reduced in the larger-shutter-angle presentation.

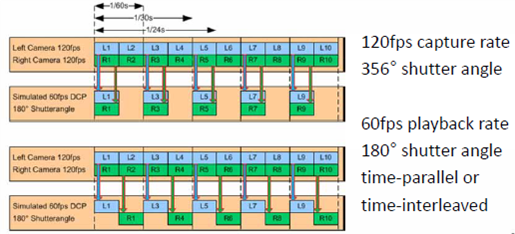

Foessel had another set of demonstrations, as shown above. Both showed the equivalent of 60 fps with roughly a 180-degree shutter angle, but in one set the left- and right-eye views were time coincident, and in the other they alternated. In the alternating one, the boxers' moving arms had a semitransparent, ghostly quality.
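
One way the two 60-fps versions could be derived from a synchronized 120-fps, 356-degree stereo capture is sketched below. This is an assumption; the presentation, as reported here, did not say how the sequences were made. Taking every other frame gives 356 / 2 = 178 degrees, close to 180. In the alternating version, the right-eye view lags the left by one 120-fps frame period, so a moving arm is captured at different instants for each eye, which may contribute to the ghostly look. The function name is hypothetical.

```python
CAPTURE_FPS = 120       # synchronized stereo capture rate, per the Fraunhofer test
CAPTURE_ANGLE = 356.0   # degrees


def eye_frame_indices(n_output_frames: int, mode: str) -> list[tuple[int, int]]:
    """(left, right) capture-frame indices for a 60-fps stereo sequence.

    Hypothetical reconstruction:
    'coincident'  - both eyes use the same capture instant (0, 2, 4, ...).
    'alternating' - the left eye uses even frames and the right eye the
                    following odd frame, one capture period later.
    """
    if mode not in ("coincident", "alternating"):
        raise ValueError("mode must be 'coincident' or 'alternating'")
    pairs = []
    for i in range(n_output_frames):
        left = 2 * i
        right = left if mode == "coincident" else left + 1
        pairs.append((left, right))
    return pairs


if __name__ == "__main__":
    print(eye_frame_indices(3, "coincident"))   # [(0, 0), (2, 2), (4, 4)]
    print(eye_frame_indices(3, "alternating"))  # [(0, 1), (2, 3), (4, 5)]
    # One capture frame per output frame: 356 / 2 = 178 degrees at 60 fps.
    print(f"equivalent angle: {CAPTURE_ANGLE / 2:.0f} degrees")
    # Time between left- and right-eye captures in the alternating version.
    print(f"interocular offset: {1000 / CAPTURE_FPS:.2f} ms")  # 8.33 ms
```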

The SMPTE TSC wasn’t the only place where the effects of angles on stereoscopic 3D could be seen at NAB 2012. Another was at any of the many glasses-free displays. All of them had a relatively restricted viewing angle, though that angle was always far greater than the viewing angle of the only true live holographic video system ever shown at NAB.

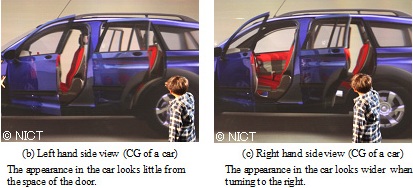

That system was shown at NAB 2009 by NICT, Japan’s National Institute of Information and Communications Technology. It was smaller than an inch across, had an extremely restricted viewing angle, and, as can be seen above, was not exactly of entertainment quality. It also required an optics lab’s equipment for both shooting and display.

At NAB 2012, NICT was back. As at NAB 2009, they showed a multi-sensory apparatus, but this time it added 3D sound and olfactory stimulus. And, as at NAB 2009, they offered a means to view 3D without glasses.

This time, however, as shown above, instead of being poor quality, it was full HD; instead of being less than an inch across, it measured 200 inches; and, instead of having the show's most-restricted no-glasses-3D viewing angle, it had the broadest. It also had something no other glasses-free 3D display at the show offered: the ability to look around objects by changing viewing angle, as shown below.

There was another big difference between the 2009 live hologram and the 2012 200-inch glasses-free system. There were no granite optical tables, first-surface mirrors, or lasers involved. The technology is so simple, it was explained in a single diagram (below).

Granted, aligning 200 projectors is not exactly trivial, and the rear-projection space required currently precludes installation in most homes. Despite its prototypical nature, however, NICT’s 200-inch, glasses-free, look-around-objects 3D system could be installed at a venue like a shopping center or sports arena today.

Of course, there is something else to be considered. The images shown were computer graphics. There doesn’t seem to be a 200-view camera rig yet.

Tags: 200-inch glasses-free, Fraunhofer Institut, HFR, high frame rate, live hologram, NAB 2012, NICT, Park Road Post, shutter angle, SMPTE, stereoscopic 3D, Technology Summit on Cinema, The Hobbit

Mark,

I have a question for you.

I understand the percentage-shutter theory, but I don't understand why the industry is still using it as opposed to being more direct. For example, why say

"60 fps at 180-degree shutter" and not "60 fps with 1/120 exposure"?

A given shutter angle means a completely different exposure depending on the fps.

I find this very confusing, as quoting any shutter angle means nothing without the fps.

Why is the industry not just moving to exposure time (which has a certain image/motion look) and removing the issue of fps with regard to the look/motion blur?

That is, using exposure X gives a certain look that does not necessarily depend on the fps.

Is there any particular reason to refer to it in this way as opposed to exposure time?

Is this just old dogs applying the way they work to a new world of many frame rates?

With fps now a very variable item in film production, should we not move away from degree shutter?

Can you expand on this and also give your opinion?

Thanks,

James Gardiner

http://www.cinetechgeek.com

I can think of at least three reasons. One is the cinematographic tradition. I’m currently working on a research project relating to electronic image-sensing technology used inside cameras, and I’ve been surveying many members of the industry about it. Of course, those who developed the technology are familiar with it. Among users, however, those who come from an electronic-imaging background — the ones who use the technology most — don’t seem to know much about it, whereas those who come from a film-imaging background do. Cinematographers have been figuring out what works and what doesn’t a lot longer than videographers, so if they prefer to speak of angles that’s good enough for me.

Another reason may be found in my post above. Park Road Post demonstrated multiple frame rates and multiple shutter angles at the SMPTE Technology Summit on Cinema, and in each case the change from 270 degrees to 360 degrees had the greatest effect toward a video-type look, regardless of frame rate. If those results had been reported (rounded to two decimal places) as exposures of 0.03 second versus 0.04 second at 24 fps, 0.02 vs. 0.02 second at 48 fps, and 0.01 vs. 0.02 second at 60 fps, would you have had a clue about what was going on? Even with more digits, that would be 0.03125 vs. 0.04167 second at 24 fps, 0.015625 vs. 0.02083 second at 48 fps, and 0.0125 vs. 0.01667 second at 60 fps. Is that clearer than saying that, regardless of frame rate, shifting from 270 degrees to 360 degrees created more of a video-type look?
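
Those figures can be reproduced with a short sketch (function names hypothetical, idealized shutter assumed). It also shows what stays constant as the frame rate changes: going from 270 to 360 degrees always lengthens the exposure by a factor of 4/3, and the shutter is always closed for a quarter of each frame period at 270 degrees and never at 360.

```python
def exposure_s(fps: float, angle_deg: float) -> float:
    """Exposure time: open fraction of the shutter times the frame period."""
    return (angle_deg / 360.0) / fps


def closed_fraction(angle_deg: float) -> float:
    """Fraction of every frame period during which the shutter is closed."""
    return 1.0 - angle_deg / 360.0


if __name__ == "__main__":
    for fps in (24, 48, 60):
        t270, t360 = exposure_s(fps, 270), exposure_s(fps, 360)
        print(f"{fps} fps: {t270:.6f} s vs. {t360:.6f} s "
              f"(360/270 ratio {t360 / t270:.3f})")
    # Unlike the times above, the closed fraction ignores the frame rate.
    print(f"closed: {closed_fraction(270):.0%} at 270 deg, "
          f"{closed_fraction(360):.0%} at 360 deg")
```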

Finally, even in this supposedly all-electronic era, some of the latest and greatest cameras using electronic image sensors still offer mechanical rotary shutters.

TTFN,

Mark

Thanks Mark,

But I must admit that this effect you have been talking about, in which a fully open 360-degree shutter makes it look more like video at any fps, intrigues me.

I have been racking my brain all day over that discovery and came up with a question.

If we first set aside the issue that people do not seem to like the video look, and just talk about the result, the results seem to indicate that part of the video look comes from the fact that there are no visual steps between frames 1 and 2.

If the shutter is open a full 360 degrees, the motion blur would overlap/join with that of the previous frame. If the shutter is less than 360 degrees, the motion blur will have a step or gap between where it stops in one frame and where it starts in the next.
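
The geometry described here can be made concrete. For an object moving at constant speed, each frame's blur trail is speed × exposure long, while the object advances speed / fps between frames; the difference is the gap. A sketch with a hypothetical speed of 960 pixels per second and an idealized shutter (the function name is also hypothetical):

```python
def blur_and_gap(speed_px_per_s: float, fps: float,
                 shutter_angle_deg: float) -> tuple[float, float]:
    """Blur-trail length in one frame and the empty gap before the next
    frame's trail begins, for an object moving at constant speed.

    Idealized model: instantaneous shutter edges, uniform motion, and no
    sensor or display effects.
    """
    frame_period = 1.0 / fps
    exposure = (shutter_angle_deg / 360.0) * frame_period
    travel_per_frame = speed_px_per_s * frame_period  # distance between frames
    blur = speed_px_per_s * exposure                  # smear while shutter open
    return blur, travel_per_frame - blur


if __name__ == "__main__":
    SPEED = 960.0  # hypothetical object speed, pixels per second
    for fps in (24, 48, 60):
        for angle in (270, 360):
            blur, gap = blur_and_gap(SPEED, fps, angle)
            print(f"{fps} fps, {angle} deg: blur {blur:.1f} px, gap {gap:.1f} px")
```

At 270 degrees the gap is always a quarter of the per-frame travel (10, 5, and 4 pixels at 24, 48, and 60 fps in this example); at 360 degrees it is zero at every frame rate. This illustrates the step being described; it does not by itself establish that the step is what viewers perceive as the film look.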

This is the main difference I could come up with. Is this step effect just a "what we are used to" result of the historic film process? Could this hyper-real result of the 360-degree shutter be exactly what we should expect? Would it be welcomed by a person who has never seen film before? That is, is this a bad thing, or just an issue for those not used to it (those used to traditional film)?

You saw it and have great analytical eyes. What do you think?

This is a very interesting result to me, as I am a big believer in HFR 2D and 3D. I think we will be shooting more HFR very soon, much more than 3D.

Results like this do make me wonder...

I wonder if this is more of a generational thing, and not like going from SR to digital audio (which was universally liked). Going HFR is a similar transition for your eyes.

If anything it shows us how different our ears are to our eyes in the way we process information.

Thanks,

James

Thanks for the comment on my eyes, but they belong to an engineer, and I hesitate to make aesthetic comments. That’s what artists are for.

Once I worked on a classical-music television show in which the director chose to use an effect that I considered awful. I was not alone; everyone on the technical crew considered it awful, too. But the audience loved it, and the conductor said he found it so powerful that it moved him to tears.

Here’s something I wrote in a previous post this year, “Smellyvision and Associates”:

“Back in the publicized 70-mm film era, special-effects wizard, inventor, and director Douglas Trumbull created a system for increasing temporal resolution in the same way that 70-mm offered greater spatial resolution than 35-mm film. It was called Showscan, with 60 frames per second (fps) instead of 24.

“The results were stunning, with a much greater sensation of reality. But not everyone was convinced it should be used universally. In the August 1994 issue of American Cinematographer, Bob Fisher and Marji Rhea interviewed a director about his feelings about the process after viewing Trumbull’s 1989 short, ‘Leonardo’s Dream.’

“’After that film was completed, I drew a very distinct conclusion that the Showscan process is too vivid and life-like for a traditional fiction film. It becomes invasive. I decided that, for conventional movies, it’s best to stay with 24 frames per second. It keeps the image under the proscenium arch. That’s important, because most of the audience wants to be non-participating voyeurs.’

“Who was that mystery director who decided 24-fps is better for traditional movies than 60-fps? It was the director of the major features ‘Brainstorm’ and ‘Silent Running.’ It was Douglas Trumbull.

"As perhaps the greatest proponent of high-frame-rate shooting today, Trumbull was more recently asked about his 1994 comments. He responded that a director might still seek a more-traditional look for storytelling, but by shooting at a higher frame rate that option will remain open, and the increased spatial detail offered by a higher frame rate will also be an option."

At the NAB/SMPTE Technology Summit on Cinema, Phil Oatley of Park Road Post carefully pointed out that what we saw was experimental footage. There were no costumes, sets, real actors, real direction, or cinematographic decisions to create a particular look. I believe that, with those applied, the sensation would have been different. Conversely, some who say they have seen scenes from the movie have reported that they don't like the look.

All of that being said, my research shows that perception is learned (there will be more about that in my next post), so, for whatever my opinion is worth, I tend to agree with you about generations. People who grew up in the era of monochrome movies feel differently about color vs. black-&-white than do those who grew up in the color-movie era. Yet, even today, when it’s hard to find a monochrome camera, directors sometimes intentionally choose black-&-white.

TTFN,

Mark
